2 Basic Cuisine: A Review on Probability and Frequentist Statistical Inference

The Importance of This Chapter

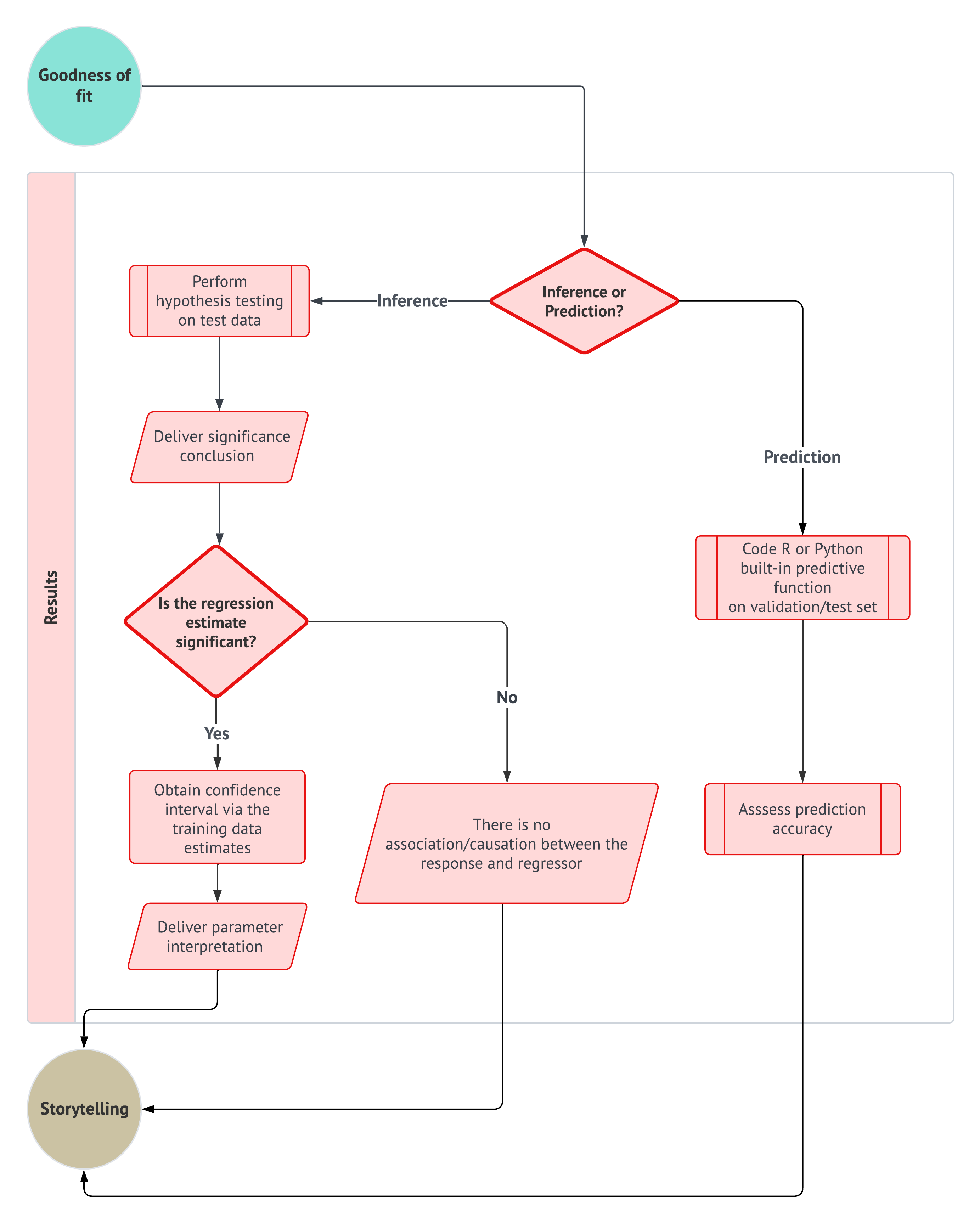

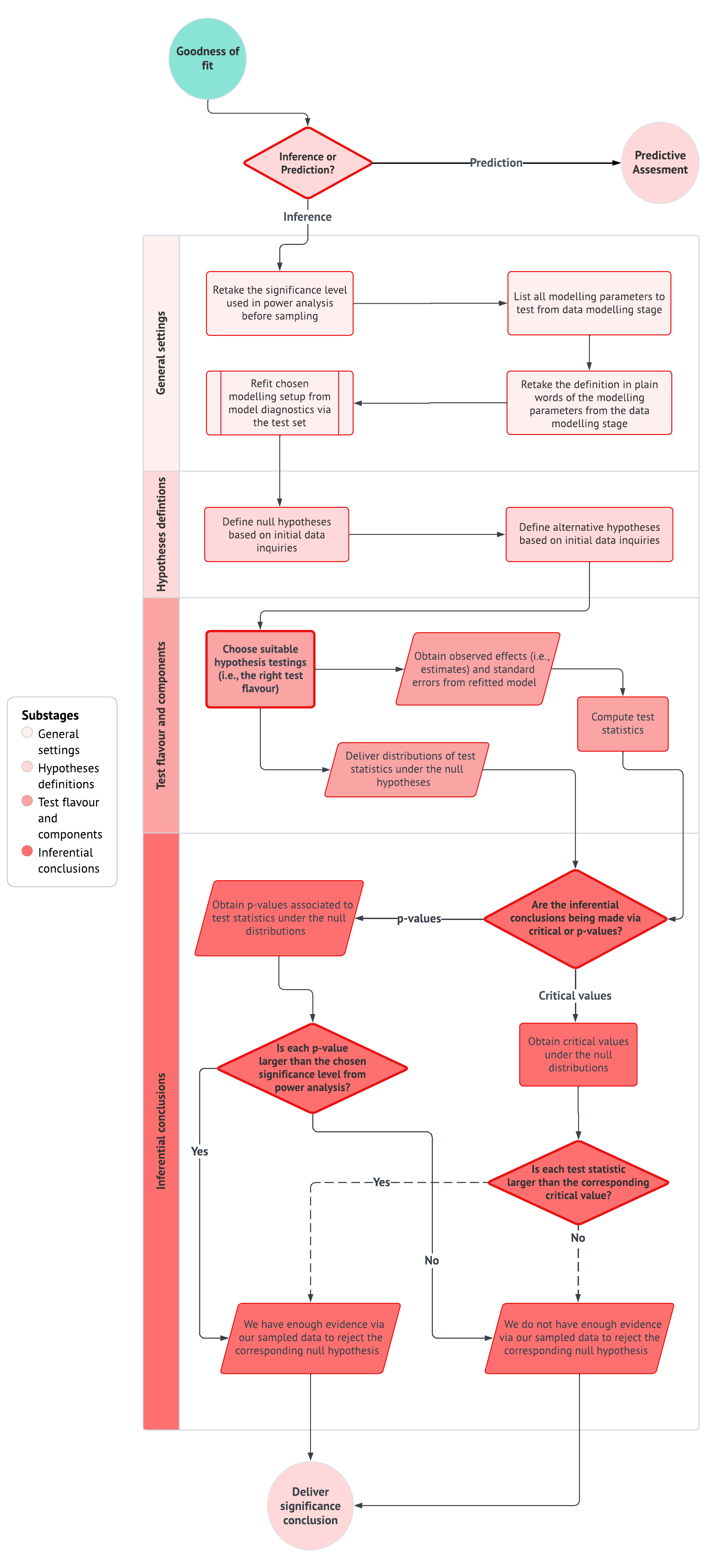

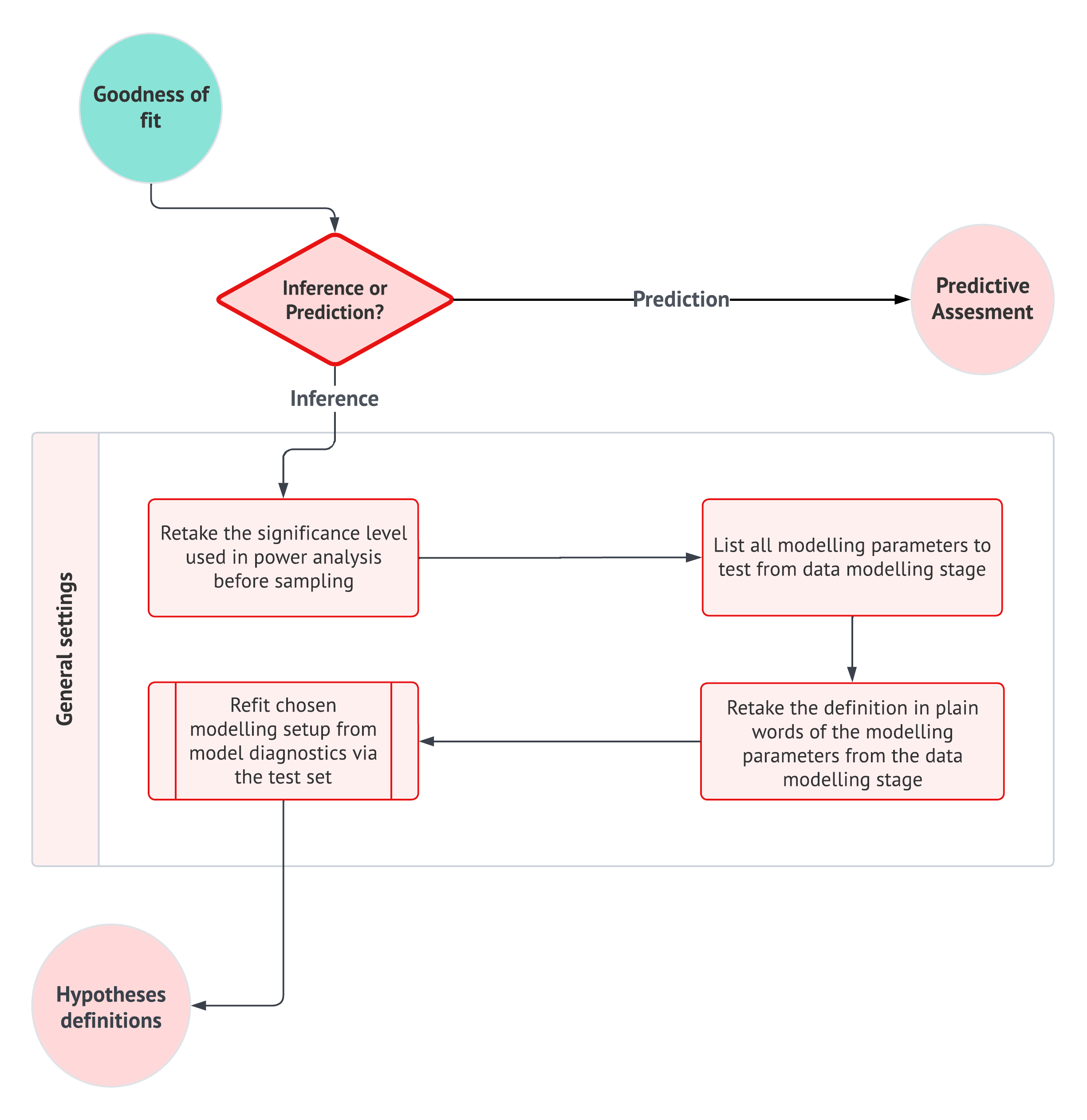

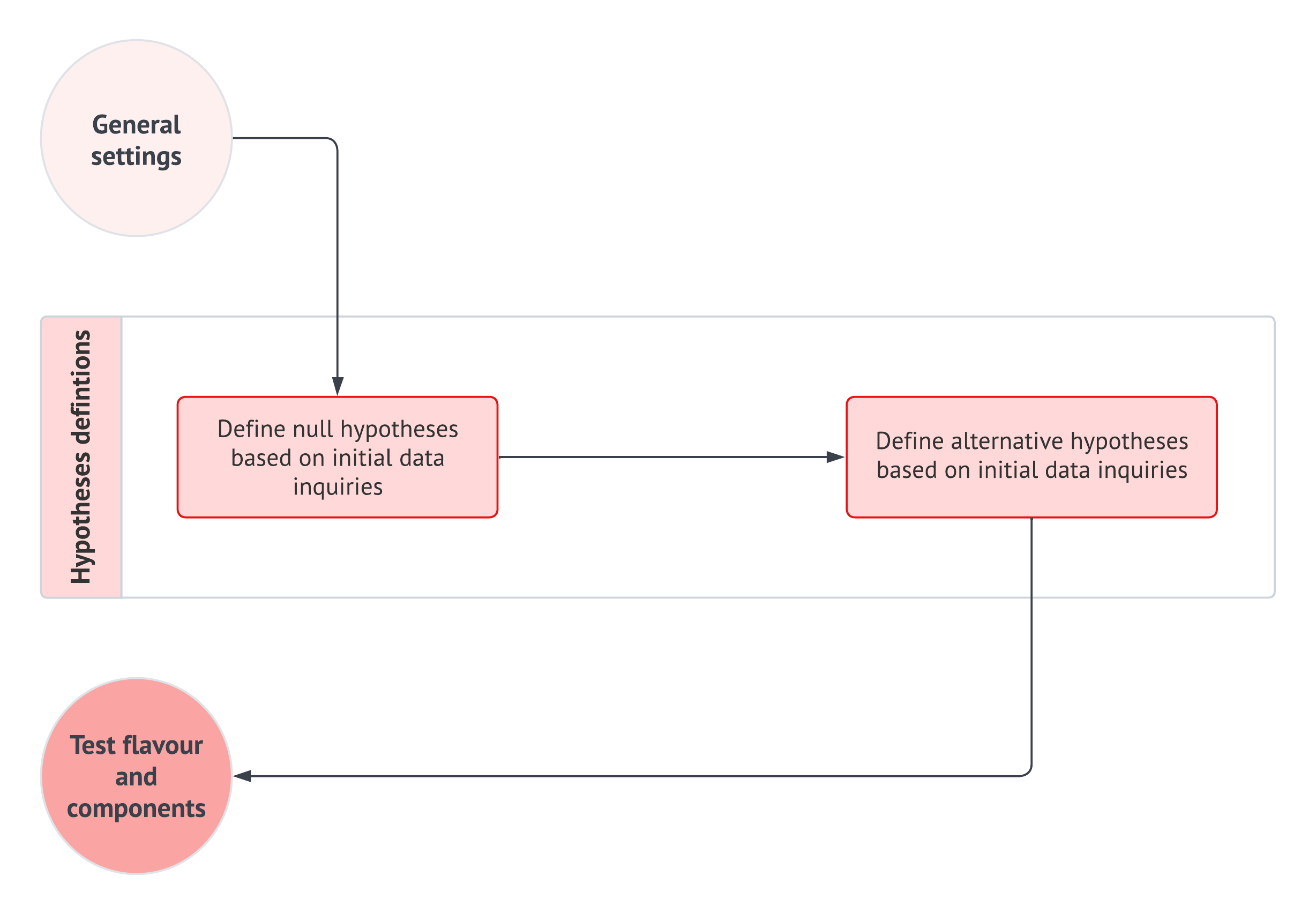

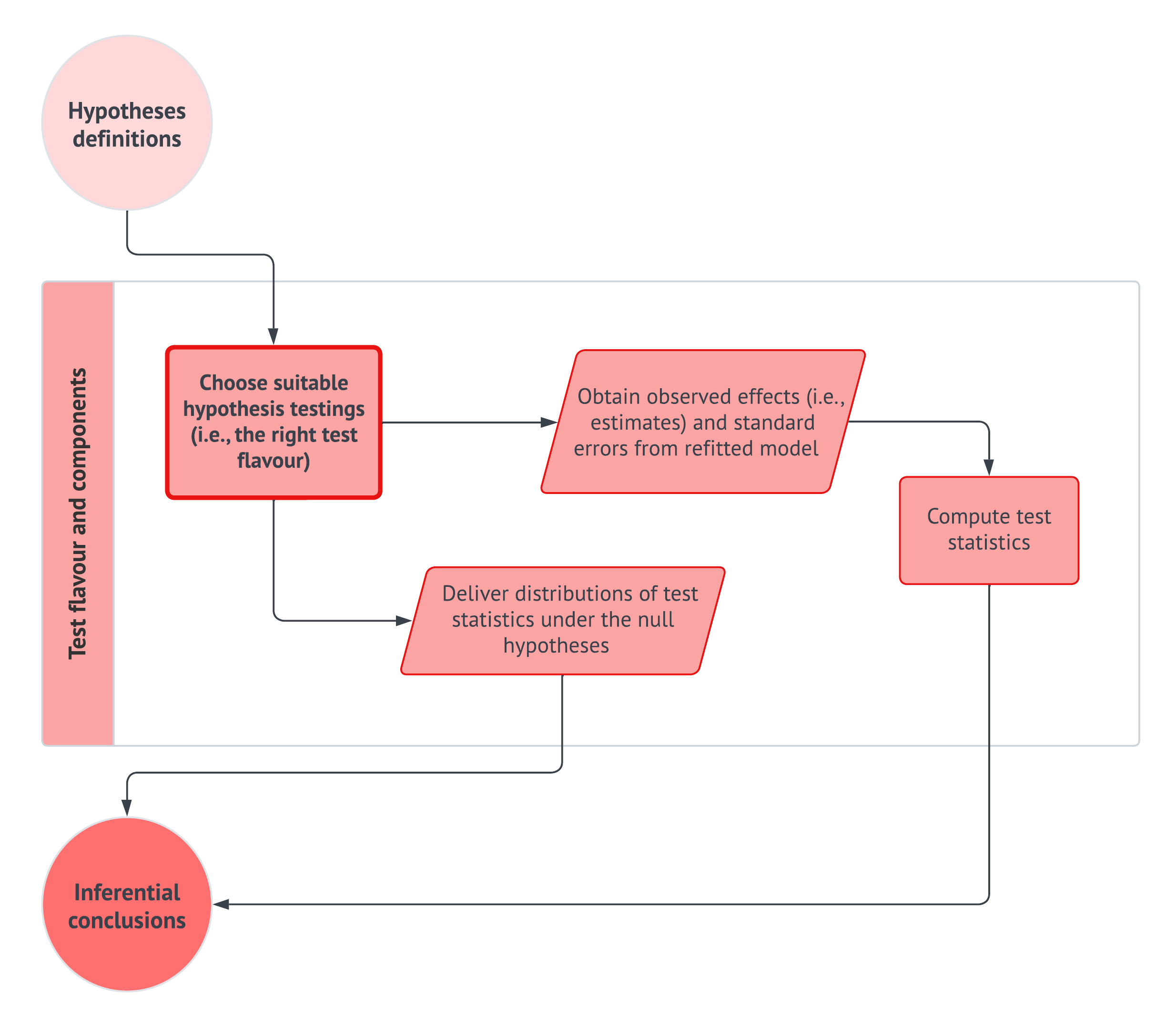

This chapter will delve into the fundamentals of probability and frequentist statistical inference. This review will be important to understanding the philosophy of modelling parameter estimation as outlined in Section 1.2.5. It will also pave the way to the rationale behind statistical inference in the Results stage of our workflow from Figure 1.1. Note that we aim to explain all these statistical and probabilistic concepts in the most practical way possible via a made-up case study throughout this chapter (while still presenting useful theoretical admonitions as outlined in Chapter 1).

Learning Objectives

By the end of this chapter, you will be able to:

- Discuss why having a complete conceptual understanding of the process of statistical inference is key when conducting studies for general audiences.

- Explain why probability is the language of statistics.

- Recall foundational probabilistic insights.

- Break down the differences between the two schools of statistical thinking: frequentist and Bayesian.

- Apply the philosophy of generative modelling along with probability distributions in parameter estimation.

- Justify using measures of central tendency and uncertainty to characterize probability distributions.

- Illustrate how random sampling can be used in parameter estimation.

- Describe conceptually what maximum likelihood estimation entails in a frequentist framework.

- Formulate a maximum likelihood approach in parameter estimation.

- Outline the process of classical frequentist hypothesis testing to solve inferential inquiries.

Let us start with a relatable story!

Imagine you are an undergraduate engineering student. Last term, you took and passed your first course in probability and statistics (inference included) in an industrial engineering context. As can happen in an introductory course in probability and statistics, you felt quite overwhelmed by the large amount of jargon and formulas one had to grasp and use regularly for primary engineering fields such as quality control in a manufacturing facility. Population parameters, hypothesis testing, test statistics, significance level, \(p\)-values, and confidence intervals were appearing here and there. And to your frustration, you could never find a statistical connection between all these inferential tools! Instead, you relied on mechanistic procedures when solving assignments or exam problems.

For instance, when performing hypothesis testing for a two-sample \(t\)-test, you struggled to reflect on what the hypotheses were trying to indicate for the corresponding population parameters or how the test statistic was related to these hypotheses. Moreover, your interpretation of the resulting \(p\)-value and/or confidence interval was purely mechanical with the inherent claim:

With a significance level \(\alpha = 0.05\), we reject (or fail to reject, if that is the case) the null hypothesis given that…

Truthfully, this whole mechanical way of doing statistics is not ideal in a teaching, research or industry environment. Along the same lines, the above situation should also not happen when we learn key statistical topics for the very first time as undergraduate students. That is why we will investigate a more intuitive way of viewing probability and its crucial role in statistical inference. This matter will help us deliver more coherent storytelling (as in Section 1.2.8) when presenting our results in practice during any regression analysis to our peers or stakeholders. Note that the role of probability also extends to model training (as in Section 1.2.5) when it comes to supervised learning and not just regarding statistical inference.

Having said all this, it is time to introduce a statement that is key when teaching hypothesis testing in an introductory statistical inference course:

In statistical inference, everything always boils down to randomness and how we can control it!

That is quite a bold statement! Nonetheless, once one starts presenting statistical topics to audiences not entirely familiar with the usual field jargon, the idea of randomness always persists across many different tools. And, of course, regression analysis is not an exception at all since it also involves inference on population parameters of interest. This is why we have allocated this chapter in the textbook to explain core probabilistic and inferential concepts and pave the way to their role in regression analysis.

Heads-up on what we mean by a non-ideal mechanical analysis!

The reader might need clarification on why the mechanical way of performing hypothesis testing is considered non-ideal, mainly when the term cookbook is used in the book’s title. The cookbook concept here actually refers to a homogenized recipe for data modelling, as seen in the workflow from Figure 1.1. However, there is a crucial distinction between this and the non-ideal mechanical way of hypothesis testing.

On the one hand, the non-ideal mechanical way refers to the use of a tool without understanding the rationale of what this tool stands for, resulting in vacuous and standard statements that we would not be able to explain any further, such as the statement we previously indicated:

With a significance level \(\alpha = 0.05\), we reject (or fail to reject, if that is the case) the null hypothesis given that…

What if a stakeholder of our analysis asks us in plain words what a significance level means? Why are we phrasing our conclusion on the null hypothesis and not directly on the alternative one? As a data scientist, one should be able to explain why the whole inference process yields that statement without misleading the stakeholders’ understanding. For sure, this also calls for appropriate communication skills that cater to general audiences rather than just technical ones.

Conversely, the data modelling workflow in Figure 1.1 involves stages that necessitate a comprehensive and precise understanding of our analysis. Progressing to the next stage (without a complete grasp of the current one) risks perpetuating false insights, potentially leading to faulty data storytelling of the entire analysis.

Specifically, this chapter will review the following:

- The role of random variables and probability distributions and the governance of population (or system) parameters (i.e., the so-called Greek letters we usually see in statistical inference and regression analysis). Section 2.1 will explore these topics in more detail while connecting them to the subsequent inferential terrain under a frequentist context.

- When delving into supervised learning and regression analysis, we might wonder how randomness is incorporated into model fitting (i.e., parameter estimation). That is quite a fascinating aspect, implemented via a crucial statistical tool known as maximum likelihood estimation. This tool is heavily related to the concept of a loss function in supervised learning. Section 2.2 will explore these matters in more detail and how the idea of a random sample is connected to this estimation tool.

- Section 2.3 will explore the basics of hypothesis testing and its intrinsic components such as null and alternative hypotheses, type I and type II errors, significance level, power, observed effect, standard error, test statistic, critical value, \(p\)-value, and confidence interval.

Without further ado, let us start with reviewing core concepts in probability via quite a tasty example.

2.1 Basics of Probability

In terms of regression analysis (either in an inferential or predictive framework), probability can be viewed as the solid foundation on which more complex tools, including estimation and hypothesis testing, are built. Having said that, let us scaffold across all the necessary probabilistic concepts that will allow us to move forward into these more complex tools.

2.1.1 First Insights

To start building up our solid probabilistic foundation, we assume our data is coming from a given population or system of interest. Moreover, the population or system is assumed to be governed by parameters which, as data scientists or researchers, we are interested in studying. That said, the terms population and parameter will pave the way to our first statistical definitions.

Definition of population

It is a whole collection of individuals or items that share distinctive attributes. As data scientists or researchers, we are interested in studying these attributes, which we assume are governed by parameters. In practice, we must be as specific as possible when defining our given population so that we can frame our entire data modelling process from its very early stages. Examples of a population could be the following:

- Children between the ages of 5 and 10 years old in states of the American West Coast.

- Customers of musical vinyl records in the Canadian provinces of British Columbia and Alberta.

- Avocado trees grown in the Mexican state of Michoacán.

- Adult giant pandas in the Southwestern Chinese province of Sichuan.

- Mature açaí palm trees from the Brazilian Amazonian jungle.

Note that the term population could be exchanged for the term system, given that certain contexts do not particularly refer to individuals or items. Instead, these contexts could refer to processes whose attributes are also governed by parameters. Examples of a system could be the following:

- The production of cellular phones from a given model in a set of manufacturing facilities.

- The sale process in the Vancouver franchises of a well-known ice cream parlour.

- The transit cycle during rush hours on weekdays in the twelve lines of Mexico City’s subway.

Definition of parameter

It is a characteristic (numerical or even non-numerical, such as a distinctive category) that summarizes the state of our population or system of interest. Examples of a population parameter can be described as follows:

- The average weight of children between the ages of 5 and 10 years old in states of the American West Coast (numerical).

- The variability in the height of the mature açaí palm trees from the Brazilian Amazonian jungle (numerical).

- The proportion of defective items in the production of cellular phones in a set of manufacturing facilities (numerical).

- The average customer waiting time to get their order in the Vancouver franchises of a well-known ice cream parlour (numerical).

- The favourite pizza topping of vegetarian adults between the ages of 30 and 40 years old in Edmonton (non-numerical).

Note that the standard mathematical notation for population parameters uses Greek letters (for more insights, you can check Appendix B). Moreover, in practice, these population parameter(s) of interest will be unknown to the data scientist or researcher. Instead, they would use formal statistical inference to estimate them.

The parameter definition points out a crucial fact in investigating any given population or system:

Our parameter(s) of interest are usually unknown!

This fact is pretty unfortunate and inconvenient if we want to discover any significant insights about the population or system. Therefore, let us proceed to our so-called tasty example so we can dive into the need for statistical inference and why probability is our perfect ally in this parameter quest.

Imagine you are the owner of a large fleet of ice cream carts, around 900 to be exact. These ice cream carts operate across different parks in the following Canadian cities: Vancouver, Victoria, Edmonton, Calgary, Winnipeg, Ottawa, Toronto, and Montréal. In the past, to optimize operational costs, you decided to limit ice cream cones to only two items: vanilla and chocolate flavours, as in Figure 2.1.

Now, let us direct this whole case onto a more statistical and probabilistic field; suppose you have a well-defined overall population of interest for the above eight Canadian cities: children between 4 and 11 years old attending these parks during the Summer weekends. Of course, Summer time is coming this year, and you would like to know which ice cream cone flavour is the favourite one for this population (and by how much!). As a business owner, investigating ice cream flavour preferences would allow you to plan Summer restocks more carefully with your corresponding suppliers. Therefore, it would be essential to start collecting consumer data so the company can tackle this demand query.

Also, suppose there is a second query. For the sake of our case, we will call it a time query. As a critical component of demand planning, besides estimating which cone flavour is the most preferred one (and by how much!) for the above population of interest, the operations area is currently requiring a realistic estimation of the average waiting time from one customer to the next one in any given cart during Summer weekends. This average waiting time would allow the operations team to plan carefully how much stock each cart should have so there will not be any waste or shortage.

Note that the time query is related to a different population from the previous query. Therefore, we can define it as all our ice cream customers during the Summer weekends and not just all the children between 4 and 11 years old attending the parks during Summer weekends. Consequently, it is crucial to note that the nature of our queries will dictate how we define our population and our subsequent data modelling and statistical inference.

Summer time represents the most profitable season from a business perspective; thus, solving the above two queries is a significant priority for your company. Hence, you decide to organize a meeting with your eight general managers (one per Canadian city). During the meeting with the general managers, it was decided to do the following:

- For the demand query, a comprehensive market study will be run on the population of interest across the eight Canadian cities right before next Summer; suppose we are currently in Spring.

- For the time query, since the operations team has not previously recorded any historical data (surprisingly!), all vendor staff from the 900 carts will start collecting data on the waiting time in minutes between consecutive customers this upcoming Summer.

When discussing the requirements of the demand query study with the marketing firm that would be in charge of it, Vancouver’s general manager dares to state the following:

Since we’re already planning to collect consumer data on these cities, let’s mimic a census-type study to ensure we can have the most precise results on their preferences.

On the other hand, when agreeing on the specific operations protocol to start recording waiting times for all the 900 vending carts this upcoming Summer, Ottawa’s general manager provides a comment for further statistical food for thought:

The operations protocol for recording waiting times in the 900 vending carts looks too cumbersome to implement straightforwardly this upcoming Summer. Why don’t we select a smaller set of waiting times between two general customers across the 900 ice cream carts in the eight cities to have a more efficient process implementation that would allow us to optimize operational costs?

Bingo! Ottawa’s general manager just nailed the probabilistic way of making inference on our population parameter of interest for the time query. Indeed, their comment was primarily framed from a business perspective of optimizing operational costs. Still, this fact does not take away a crucial insight on which statistical inference is built: a random sample (as in its corresponding definition). As for Vancouver’s general manager, their proposal is not feasible. Mimicking a census-type study might not be the most optimal decision for the demand query given the time constraint and the potential size of its target population.

Heads-up on the use of random sampling with probabilistic foundations!

Let us clarify things from the start, especially from a statistical perspective:

Realistically, there is no cheap and efficient way to conduct a census-type study for either of the two queries.

We must rely on probabilistic random sampling, selecting two small subsets of individuals from our two populations of interest. This approach allows us to save both financial and operational resources compared to conducting a complete census. However, random sampling requires us to use various probabilistic and inferential tools to manage and report the uncertainty associated with the estimation of the corresponding population parameters, which will help us answer our initial main queries.

Therefore, having said all this, let us assume that in this ice cream case, the company decided to go ahead with random sampling to answer both queries.

Moving on to one of the core topics in this chapter, we can state that probability is viewed as the language to decode random phenomena that occur in any given population or system of interest. In our example, we have two random phenomena:

- For the demand query, a phenomenon can be represented by the preferred ice cream cone flavour of any randomly selected child between 4 and 11 years old attending the parks of the above eight Canadian cities during the Summer weekends.

- Regarding the time query, a phenomenon of this kind can be represented by any randomly recorded waiting time between two customers during a Summer weekend in any of the above eight Canadian cities across the 900 ice cream carts.

Now, let us finally define what we mean by probability along with the inherent concept of sample space.

Definition of probability

Let \(A\) be an event of interest in a random phenomenon of a population or system of interest, all of whose possible outcomes belong to a given sample space \(S\). Generally, the probability of this event \(A\) happening is denoted \(P(A)\). Moreover, suppose we observe the random phenomenon \(n\) times, as if we were running some kind of experiment; then \(P(A)\) is defined as the following ratio:

\[ P(A) = \frac{\text{Number of times event $A$ is observed}}{n}, \tag{2.1}\]

as the \(n\) times we observe the random phenomenon goes to infinity.

Equation 2.1 will always put \(P(A)\) in the following numerical range:

\[ 0 \leq P(A) \leq 1. \]
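To make the limiting ratio in Equation 2.1 more tangible, here is a minimal Python sketch that simulates the demand query. It assumes a hypothetical true probability of 0.6 that a randomly selected child prefers chocolate; this value is made up purely for illustration (in practice, the parameter is unknown), and the names `TRUE_P_CHOCOLATE` and `relative_frequency` are ours rather than part of the case study.

```python
import random

# Hypothetical "true" probability that a child prefers chocolate.
# This value is made up for illustration; in practice it is unknown.
TRUE_P_CHOCOLATE = 0.6


def relative_frequency(n, p=TRUE_P_CHOCOLATE, seed=2024):
    """Observe the random phenomenon n times and return the ratio in Equation 2.1."""
    rng = random.Random(seed)
    # Event A: the randomly selected child prefers chocolate.
    times_a_observed = sum(rng.random() < p for _ in range(n))
    return times_a_observed / n


if __name__ == "__main__":
    for n in (10, 100, 1_000, 10_000, 100_000):
        print(f"n = {n:>7}: relative frequency of A = {relative_frequency(n):.4f}")
```

Under these assumptions, the printed ratio always stays between 0 and 1 and should settle closer to the assumed probability as \(n\) grows, which is the frequentist reading of \(P(A)\).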

Definition of sample space

Let \(A\) be an event of interest in a random phenomenon of a population or system of interest. The sample space \(S\) associated with event \(A\) denotes the set of all the possible random outcomes we might encounter every time we observe the random phenomenon, as if we were running some kind of experiment.

Note that each of these outcomes has a determined probability associated with it. If we add up all these probabilities, the probability of the sample space \(S\) will be one, i.e.,

\[ P(S) = 1. \tag{2.2}\]
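As a small illustration under our own labelling (the outcome names below are an assumption, chosen to match the two-flavour menu of the ice cream case), the sample space for the preferred flavour of one randomly selected child could be written as

\[ S = \{\text{chocolate}, \text{vanilla}\}. \]

Since a child can only pick one of these two mutually exclusive outcomes, Equation 2.2 would then read

\[ P(S) = P(\text{chocolate}) + P(\text{vanilla}) = 1. \]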

2.1.2 Schools of Statistical Thinking

Note the above definition for the probability of an event \(A\) specifically highlights the following:

… as the \(n\) times we observe the random phenomenon goes to infinity.

The “infinity” term is key when it comes to understanding the philosophy behind the frequentist school of statistical thinking in contrast to its Bayesian counterpart. In general, the frequentist way of practicing statistics in terms of probability and inference is the approach we usually learn in introductory courses, more specifically when it comes to hypothesis testing and confidence intervals which will be explored in Section 2.3. That said, the Bayesian approach is another way of practicing statistical inference. Its philosophy differs in what information is used to infer our population parameters of interest. Below, we briefly define both schools of thinking.

Definition of frequentist statistics

This statistical school of thinking heavily relies on the frequency of events to estimate specific parameters of interest in a population or system. This frequency of events is reflected in the repetition of \(n\) experiments involving a random phenomenon within this population or system.

Under the umbrella of this approach, we assume that our governing parameters are fixed. Note that, within the philosophy of this school of thinking, we can only make precise and accurate predictions as long as we repeat our \(n\) experiments as many times as possible, i.e.,

\[ n \rightarrow \infty. \]

Definition of Bayesian statistics

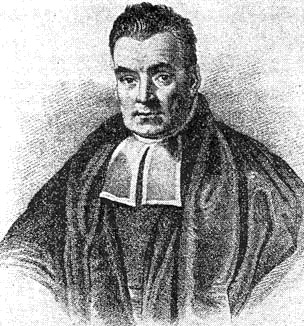

This statistical school of thinking also relies on the frequency of events to estimate specific parameters of interest in a population or system. Nevertheless, unlike frequentist statisticians, Bayesian statisticians use prior knowledge on the population parameters to update their estimations on them along with the current evidence they can gather. This evidence is in the form of the repetition of \(n\) experiments involving a random phenomenon. All these ingredients allow Bayesian statisticians to make inference by conducting appropriate hypothesis tests, which are designed differently from their mainstream frequentist counterparts.

Under the umbrella of this approach, we assume that our governing parameters are random; i.e., they have their own sample space and probabilities associated with their corresponding outcomes. The statistical process of inference is heavily backed up by probability theory, mostly in the form of Bayes’ rule (named after Reverend Thomas Bayes, an English statistician from the 18th century). This rule uses our current evidence along with our prior beliefs to deliver a posterior distribution of our random parameter(s) of interest.

Let us put the definitions for these two schools of statistical thinking into a more concrete example. We can use the demand query from our ice cream case as a starting point. More concretely, we can dig more into a standalone population parameter such as the probability that a randomly selected child between 4 and 11 years old, attending the parks of the above eight Canadian cities during the Summer weekends, prefers the chocolate-flavoured ice cream cone over the vanilla one. Think about the following two hypothetical questions:

- From a frequentist point of view, what is the estimated probability of preferring chocolate over vanilla after randomly surveying \(n = 100\) children from our population of interest?

- Using a Bayesian approach, suppose the marketing team has found ten prior market studies on similar children populations on their preferred ice cream flavour (between chocolate and vanilla). Therefore, along with our actual random survey of \(n = 100\) children from our population of interest, what is the posterior estimation of the probability of preferring chocolate over vanilla?

By comparing the two questions above, we can see one characteristic in common when it comes to the estimation of the probability of preferring chocolate over vanilla: both frequentist and Bayesian approaches rely on the gathered evidence coming from the random survey of \(n = 100\) children from our population of interest. On the one hand, the frequentist approach solely relies on observed data to estimate this single probability of preferring chocolate over vanilla. On the other hand, the Bayesian approach uses the observed data in conjunction with the prior knowledge provided by the ten estimated probabilities to deliver a whole posterior distribution (i.e., the posterior estimation) of the probability of preferring chocolate over vanilla.
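The contrast between the two estimates can be sketched numerically. The following Python snippet is only an illustration under stated assumptions: the survey result (58 of 100 children preferring chocolate) is made up, and we assume the prior knowledge from the ten market studies can be summarized by a Beta(12, 8) distribution, which is our modelling choice and not something the case study specifies. The Bayesian part uses the standard conjugate Beta-Binomial update.

```python
# Hypothetical survey result (made up for illustration): out of n = 100
# randomly surveyed children, 58 say they prefer chocolate.
n = 100
n_chocolate = 58

# Frequentist point estimate: uses the observed data only.
frequentist_estimate = n_chocolate / n

# Bayesian estimate: we ASSUME the ten prior studies are summarized by a
# Beta(12, 8) prior (prior mean 0.6). Both numbers are illustrative.
prior_alpha, prior_beta = 12.0, 8.0

# Conjugate Beta-Binomial update: add successes to alpha, failures to beta.
post_alpha = prior_alpha + n_chocolate
post_beta = prior_beta + (n - n_chocolate)

# The posterior is a whole distribution, Beta(post_alpha, post_beta);
# its mean and standard deviation are two summaries of it.
post_mean = post_alpha / (post_alpha + post_beta)
post_var = (post_alpha * post_beta) / (
    (post_alpha + post_beta) ** 2 * (post_alpha + post_beta + 1)
)
post_sd = post_var ** 0.5

print(f"Frequentist estimate: {frequentist_estimate:.3f}")
print(f"Posterior: Beta({post_alpha:.0f}, {post_beta:.0f})")
print(f"Posterior mean: {post_mean:.3f}, posterior sd: {post_sd:.3f}")
```

Under these assumptions, the posterior mean lands between the prior mean and the frequentist estimate, reflecting how the Bayesian answer blends prior knowledge with the observed survey.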

Heads-up on the debate between frequentist and Bayesian statistics!

Even though most of us began our statistical journey in a frequentist framework, we might be tempted to state that a Bayesian paradigm for parameter estimation and inference is better than a frequentist one since the latter only takes into account the observed evidence without the prior knowledge on our parameters of interest.

In the statistical community, there can be a fascinating debate about the pros and cons of each school of thinking. That said, it is crucial to state that no paradigm is considered wrong! Instead, using a pragmatic strategy of performing statistics according to our specific context is more convenient.

Tip on further Bayesian and frequentist insights!

Let us check the following two examples (aside from our ice cream case) to illustrate the above pragmatic way of doing things:

- Take the production of cellular phones from a given model in a set of manufacturing facilities as the context. Hence, one might find a frequentist estimation of the proportion of defective items as a quicker and more efficient way to correct any given manufacturing process. That is, we will sample products from our finalized batches and check their status (defective or non-defective, our observed evidence) to deliver a proportion estimation of defective items.

- Now, take a physician’s context. It would not make a lot of sense to study the probability that a patient develops a certain disease by only using a frequentist approach, i.e., looking at the current symptoms which account for the observed evidence. Instead, a Bayesian approach would be more suitable to study this probability, since it combines the observed evidence with the patient’s history (i.e., the prior knowledge) to deliver our posterior belief on the disease probability.

Having said all this, it is important to reiterate that the focus of this textbook is purely frequentist in regard to data modelling in regression analysis. If you would like to explore the fundamentals of the Bayesian paradigm, Johnson, Ott, and Dogucu (2022) have developed an amazing textbook on the basic probability theory behind this school of statistical thinking along with a whole variety of regression techniques including the parameter estimation rationale.

2.1.3 The Random Variables

As we continue our frequentist quest to review the probabilistic insights related to parameter estimation and statistical inference, we will focus on our ice cream case while providing a comprehensive array of definitions. Many of these definitions are inspired by the work of Casella and Berger (2024) and Soch et al. (2024).

Each time we introduce a new probabilistic or statistical concept, we will apply it immediately to this ice cream case, allowing for hands-on practice that meets the learning objectives of this chapter. It is important to pay close attention to the definition and heads-up admonitions, as they are essential for fully understanding how these concepts apply to the ice cream case. On the other hand, the tip admonitions are designed to offer additional theoretical insights that may interest you, but they can be skipped if you prefer.

| | Demand Query | Time Query |
|---|---|---|
| Statement | We would like to know which ice cream flavour is the favourite one (either chocolate or vanilla) and by how much. | We would like to know the average waiting time from one customer to the next one in any given ice cream cart. |
| Population of interest | Children between 4 and 11 years old attending different parks in Vancouver, Victoria, Edmonton, Calgary, Winnipeg, Ottawa, Toronto, and Montréal during Summer weekends. | All our general customer-to-customer waiting times in the different parks of Vancouver, Victoria, Edmonton, Calgary, Winnipeg, Ottawa, Toronto, and Montréal during Summer weekends across the 900 ice cream carts. |
| Parameter | Proportion of individuals from the population of interest who prefer the chocolate flavour versus the vanilla flavour. | Average waiting time from one customer to the next one. |

Table 2.1 presents the general statements and populations of interest derived from our two queries: demand and time. It is important to note that these general statements are based on the storytelling we initiated in Section 2.1.1. In practice, summarizing the overarching statistical problem is essential. This will enable us to translate the corresponding issue into a specific statement and population, from which we can define the parameters we aim to estimate later in our statistical process.

Now, recall that in our initial meeting with the general managers, Ottawa’s general manager provided valuable statistical insights regarding the foundation of a random sample. For the time query, they suggested selecting a smaller set of waiting times between two general customers across the 900 ice cream carts. We already addressed this process as sampling, more specifically random sampling in technical language.

Similarly, we can apply this concept to the demand query by selecting a subgroup of children aged 4 to 11 who are visiting different parks in these eight cities. Then, we can ask them about their favourite ice cream flavour, specifically whether they prefer chocolate or vanilla. It is important to note that we are not conducting any census-type studies; instead, we are carrying out two studies that heavily rely on sampling to estimate population parameters.

Furthermore, we want to ensure that our two groups of observations—both children and waiting times—are representative of their respective populations. So, how can we achieve this? The baseline key is through what we call simple random sampling. This process involves the following per query:

- For the demand query, let us assume there are \(N_d\) observations in our population of interest. In a simple random sampling scheme with replacement, our random sample will consist of \(n_d\) observations (noting that \(n_d \ll N_d\)). On every draw, each observation in the population has the same probability of being selected for our estimation and inferential purposes, which is given by \(\frac{1}{N_d}\).

- For the time query, assume there are \(N_t\) observations in our population of interest. Again, in a simple random sampling scheme with replacement, our random sample will consist of \(n_t\) observations (noting that \(n_t \ll N_t\)). On every draw, each observation in the population has the same probability of selection for estimation and inferential purposes, which is \(\frac{1}{N_t}\).

Heads-up on sampling with replacement!

Keep in mind that sampling with replacement means you return any specific drawn observation back to the corresponding population before the next draw.
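As a rough sketch of simple random sampling with replacement, the Python snippet below draws from a tiny hypothetical population of five labelled children. The labels and the population size are made up so the output stays readable; a real population would be far larger than the sample. It relies on `random.choices` from the standard library, which draws with replacement.

```python
import random
from collections import Counter

rng = random.Random(42)

# Hypothetical population of N_d = 5 children, labelled by an ID.
# (A real population would be far larger; 5 keeps the output readable.)
population = ["child_1", "child_2", "child_3", "child_4", "child_5"]
N_d = len(population)

# One simple random sample with replacement of size 3: every draw picks
# each child with probability 1 / N_d, and a drawn child is "returned" to
# the population, so the same child can appear more than once.
sample = rng.choices(population, k=3)
print("One sample with replacement:", sample)

# Repeating the draw many times, each child's selection frequency
# should approach 1 / N_d = 0.2.
draws = rng.choices(population, k=100_000)
counts = Counter(draws)
for child in population:
    frequency = counts[child] / len(draws)
    print(f"{child}: {frequency:.3f} (target {1 / N_d:.3f})")
```

The second part of the sketch is a quick empirical check that every member of the population is equally likely to be selected on any given draw.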

Tip on further sampling techniques!

If you want to explore additional and more complex sampling techniques besides simple random sampling, Section 1.2.2 provides further details and an external resource.

We can observe the concept of randomness reflected throughout the sampling schemes mentioned above. This aligns with what we referred to as random phenomena in both queries back in Section 2.1.1. Consequently, there should be a way to mathematically represent these phenomena, and the random variable is the starting point in this process.

Definition of random variable

A random variable is a function that assigns a real number to each outcome belonging to the sample space \(S\); its observed value is one of these real numbers after executing a given random experiment. For instance, a random variable (whose support is a set of real numbers) is denoted with an uppercase letter such that

\[Y \in \mathbb{R}.\]

To begin experimenting with random variables in this ice cream case, we need to define them. It is important to be as clear as possible when defining random variables, and we should also remember to use uppercase letters as follows:

\[ \begin{align*} D_i &= \text{A favourite ice cream flavour of a randomly surveyed $i$th child} \\ & \qquad \text{between 4 and 11 years old attending the parks of} \\ & \qquad \text{Vancouver, Victoria, Edmonton, Calgary,} \\ & \qquad \text{Winnipeg, Ottawa, Toronto, and Montréal} \\ & \qquad \text{during the Summer weekends} \\ & \qquad \qquad \qquad \qquad \qquad \qquad \qquad \qquad \qquad \qquad \qquad \text{for $i = 1, \dots, n_d.$} \\ \\ T_j &= \text{A randomly recorded $j$th waiting time in minutes between two} \\ & \qquad \text{customers during a Summer weekend in any of the above} \\ & \qquad \text{eight Canadian cities across the 900 ice cream carts} \\ & \qquad \qquad \qquad \qquad \qquad \qquad \qquad \qquad \qquad \qquad \qquad \text{for $j = 1, \dots, n_t.$} \\ \end{align*} \]

Note that the demand query corresponds to the \(i\)th random variable \(D_i\), where the subindex \(i\) ranges from \(1\) to \(n_d\). The term \(n_d\) represents the sample size for this query and theoretically indicates the number of random variables we intend to observe from our population of interest during our sampling. On the other hand, for the time query, we have the \(j\)th random variable \(T_j\), with the subindex \(j\) ranging from \(1\) to \(n_t\). In the context of this query, \(n_t\) denotes the sample size and indicates how many random variables we plan to observe from our population of interest as part of our sampling.

Now, \(D_i\) will require real numbers that correspond to potential outcomes derived from the specific demand sample space of ice cream flavour. It is crucial to note that a given child from our population may prefer a flavour other than chocolate or vanilla—for example, strawberry, salted caramel, or pistachio. However, we are limited by our available flavour menu as a company. Therefore, we will restrict our survey question regarding these potential \(n_d\) surveyed children as follows:

\[ d_i = \begin{cases} 1 \qquad \text{The surveyed child prefers chocolate.}\\ 0 \qquad \text{Otherwise.} \end{cases} \tag{2.3}\]

In the modelling associated with Equation 2.3, an observed random variable \(d_i\) (thus, the lowercase) can only yield values of \(1\) if the surveyed child prefers chocolate and \(0\) otherwise. The term “otherwise” refers to any flavour other than chocolate, which, in our limited menu context, is vanilla.
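The encoding in Equation 2.3 can be sketched in Python as follows. The helper name `encode_preference` and the list of survey answers are hypothetical and only serve to illustrate the mapping from a recorded flavour to the observed value \(d_i\).

```python
def encode_preference(flavour: str) -> int:
    """Return d_i as in Equation 2.3: 1 for chocolate, 0 otherwise."""
    return 1 if flavour.strip().lower() == "chocolate" else 0

# Hypothetical survey answers (illustrative only)
answers = ["chocolate", "vanilla", "Chocolate", "vanilla"]

# Observed values d_1, ..., d_4
d = [encode_preference(a) for a in answers]
print(d)
```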

To define the real numbers from a given waiting time sample space, associated with an observed random variable \(t_j\) (thus, the lowercase) measured in minutes, we need to establish a possible range for these waiting times. It would not make sense to have observed negative waiting times in this ice cream scenario; therefore, our lower bound for this range of potential values should be \(0\) minutes. However, we cannot set an upper limit on these waiting times since any ice cream vendor might need to wait for \(1, 2, 3, \ldots, 10, \ldots, 20, \ldots, 60, \ldots\) minutes for the next customer to arrive. In fact, it is possible to wait for a very long time, especially on a low sales day! Thus, the range of this observed random variable can be expressed as:

\[ t_j \in [0, \infty), \]

where the \(\infty\) symbol indicates no upper bound.

After defining the possible values for our two random variables \(D_i\) and \(T_j\), we will now classify them correctly using further probabilistic definitions as shown below.

Definition of discrete random variable

Let \(Y\) be a random variable whose support is \(\mathcal{Y}\). If this support \(\mathcal{Y}\) corresponds to a finite set or a countably infinite set of possible values, then \(Y\) is considered a discrete random variable.

For instance, we can encounter discrete random variables which could be classified as

- binary (i.e., a finite set of two possible values),

- categorical (either nominal or ordinal, which have a finite set of three or more possible values), or

- counts (which might have a finite set or a countably infinite set of possible values as integers).

Definition of continuous random variable

Let \(Y\) be a random variable whose support is \(\mathcal{Y}\). If this support \(\mathcal{Y}\) corresponds to an uncountably infinite set of possible values, then \(Y\) is considered a continuous random variable.

Note a continuous random variable could be

- completely unbounded (i.e., its set of possible values goes from \(-\infty\) to \(\infty\) as in \(-\infty < y < \infty\)),

- positively unbounded (i.e., its set of possible values goes from \(0\) to \(\infty\) as in \(0 \leq y < \infty\)),

- negatively unbounded (i.e., its set of possible values goes from \(-\infty\) to \(0\) as in \(-\infty < y \leq 0\)), or

- bounded between two values \(a\) and \(b\) (i.e., its set of possible values goes from \(a\) to \(b\) as in \(a \leq y \leq b\)).

Therefore, we can classify our two random variables as follows:

- For the demand query, the support of \(D_i\) (denoted as \(\mathcal{D}\)) is a countable finite set with two possible values: \(d_i \in \{0, 1\}\), as noted by Equation 2.3. Therefore, \(D_i\) is categorized as a binary discrete random variable.

- For the time query, the support of \(T_j\) (denoted as \(\mathcal{T}\)) is positively unbounded. This results in an uncountably infinite set of values that \(T_j\) can take, including (but not limited to) \(0, \dots, 0.01, \ldots, 0.02, \ldots, 0.00234, \ldots, 1, \ldots, 1.5576, \ldots\) minutes. Therefore, \(T_j\) is classified as a positively unbounded continuous random variable.

So far, we have successfully translated our two statistical queries into proper random variables, along with clear definitions and classifications derived from our problem statements, as well as the populations of interest, as noted in Table 2.1. However, we still need to find a way to include our parameters. The upcoming section will allow us to do that.

2.1.4 The Wonders of Generative Modelling and Probability Distributions

Before exploring the wonders of generative models, let us introduce Table 2.2, an extension of Table 2.1 that now includes the elements discussed in Section 2.1.3.

| | Demand Query | Time Query |
|---|---|---|
| Statement | We would like to know which ice cream flavour is the favourite one (either chocolate or vanilla) and by how much. | We would like to know the average waiting time from one customer to the next one in any given ice cream cart. |
| Population of interest | Children between 4 and 11 years old attending different parks in Vancouver, Victoria, Edmonton, Calgary, Winnipeg, Ottawa, Toronto, and Montréal during Summer weekends. | All our general customer-to-customer waiting times in the different parks of Vancouver, Victoria, Edmonton, Calgary, Winnipeg, Ottawa, Toronto, and Montréal during Summer weekends across the 900 ice cream carts. |
| Parameter | Proportion of individuals from the population of interest who prefer the chocolate flavour versus the vanilla flavour. | Average waiting time from one customer to the next one. |
| Random variable | \(D_i\) for \(i = 1, \dots, n_d\). | \(T_j\) for \(j = 1, \dots, n_t\). |
| Random variable definition | A favourite ice cream flavour of a randomly surveyed \(i\)th child between 4 and 11 years old attending the parks of Vancouver, Victoria, Edmonton, Calgary, Winnipeg, Ottawa, Toronto, and Montréal during the Summer weekends. | A randomly recorded \(j\)th waiting time in minutes between two customers during a Summer weekend across the 900 ice cream carts found in Vancouver, Victoria, Edmonton, Calgary, Winnipeg, Ottawa, Toronto, and Montréal. |
| Random variable type | Discrete and binary. | Continuous and positively unbounded. |
| Random variable support | \(d_i \in \{ 0, 1\}\) as in Equation 2.3. | \(t_j \in [0, \infty).\) |

Having summarized all our probabilistic elements in Table 2.2, the parameters of interest must come into play for our data modelling game. Hence, the question is:

Is there any feasible way to do so via the foundations of random variables?

The answer lies in what we call a generative model, for which we have a whole toolbox corresponding to another important concept called probability distributions, as shown below.

Definition of generative model

Suppose you observe some data \(y\) from a population or system of interest. Moreover, let us assume this population or system is governed by \(k\) parameters contained in the following vector:

\[ \boldsymbol{\theta} = (\theta_1, \theta_2, \cdots, \theta_k)^T. \]

If we state that the random variable \(Y\) follows a certain probability distribution \(\mathcal{D}(\cdot),\) then we will have a generative model \(m\) such that

\[ \text{$m$: } Y \sim \mathcal{D}(\boldsymbol{\theta}). \]

Definition of probability distribution

When we set a random variable \(Y\), we also set a new set of \(v\) possible outcomes \(\mathcal{Y} = \{ y_1, \dots, y_v\}\) coming from the sample space \(S\). This new set of possible outcomes \(\mathcal{Y}\) corresponds to the support of the random variable \(Y\) (i.e., all the possible values that could be taken on once we execute a given random experiment involving \(Y\)).

That said, let us suppose we have a sample space of \(u\) elements defined as

\[ S = \{ s_1, \dots, s_u \}, \]

where each one of these elements has a probability assigned via a function \(P_S(\cdot)\) such that

\[ P(S) = \sum_{i = 1}^u P_S(s_i) = 1, \]

which has to satisfy Equation 2.2.

Then, the probability distribution of \(Y\), i.e., \(P_Y(\cdot)\) assigns a probability to each observed value \(Y = y_j\) (with \(j = 1, \dots, v\)) if and only if the outcome of the random experiment belongs to the sample space, i.e., \(s_i \in S\) (for \(i = 1, \dots, u\)) such that \(Y(s_i) = y_j\):

\[ P_Y(Y = y_j) = P \left( \left\{ s_i \in S : Y(s_i) = y_j \right\} \right). \]
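To make this definition concrete, here is a small Python sketch that builds \(P_Y(\cdot)\) from \(P_S(\cdot)\). It assumes a hypothetical sample space of five flavours with made-up probabilities, which is broader than the two-flavour menu of the ice cream case and is used only so that several outcomes map to the same value of \(Y\).

```python
from collections import defaultdict

# Hypothetical sample space with made-up probabilities that sum to one
P_S = {
    "chocolate": 0.50,
    "vanilla": 0.20,
    "strawberry": 0.15,
    "salted caramel": 0.10,
    "pistachio": 0.05,
}

def Y(s: str) -> int:
    """Random variable: maps each outcome s in S to a real number."""
    return 1 if s == "chocolate" else 0

# P_Y(Y = y) adds up P_S(s) over every outcome s with Y(s) = y
P_Y = defaultdict(float)
for s, prob in P_S.items():
    P_Y[Y(s)] += prob

for y in sorted(P_Y):
    print(f"P(Y = {y}) = {P_Y[y]:.2f}")
```

In this sketch, the four non-chocolate outcomes collapse into the single value \(y = 0\), so the support of \(Y\) has fewer elements than the sample space, while the total probability is still one.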

Since we have two different queries, we will use two instances of generative models. It is worth noting that more complex modelling could refer to a single generative model. However, for the purposes of this review chapter, we will keep it simple via two separate generative models.

Now, let us introduce a specific notation for our discussion: the Greek alphabet. Greek letters are frequently used to statistically represent population parameters in modelling setups, estimation, and statistical inference. These letters will be quite useful for our parameters in this ice cream case.

Tip on the Greek alphabet in statistics!

In the early stages of learning statistical modelling, including concepts such as regression analysis, it is common to feel overwhelmed by unfamiliar letters and terminology. Whenever confusion arises in any of the main chapters of this book regarding these letters, we recommend referring to the Greek alphabet found in Appendix B. It is important to note that frequentist statistical inference primarily uses lowercase letters. With consistent practice over time, you will likely memorize most of this alphabet.

Let us retake the row corresponding to parameters in Table 2.2 and assign their Greek letters:

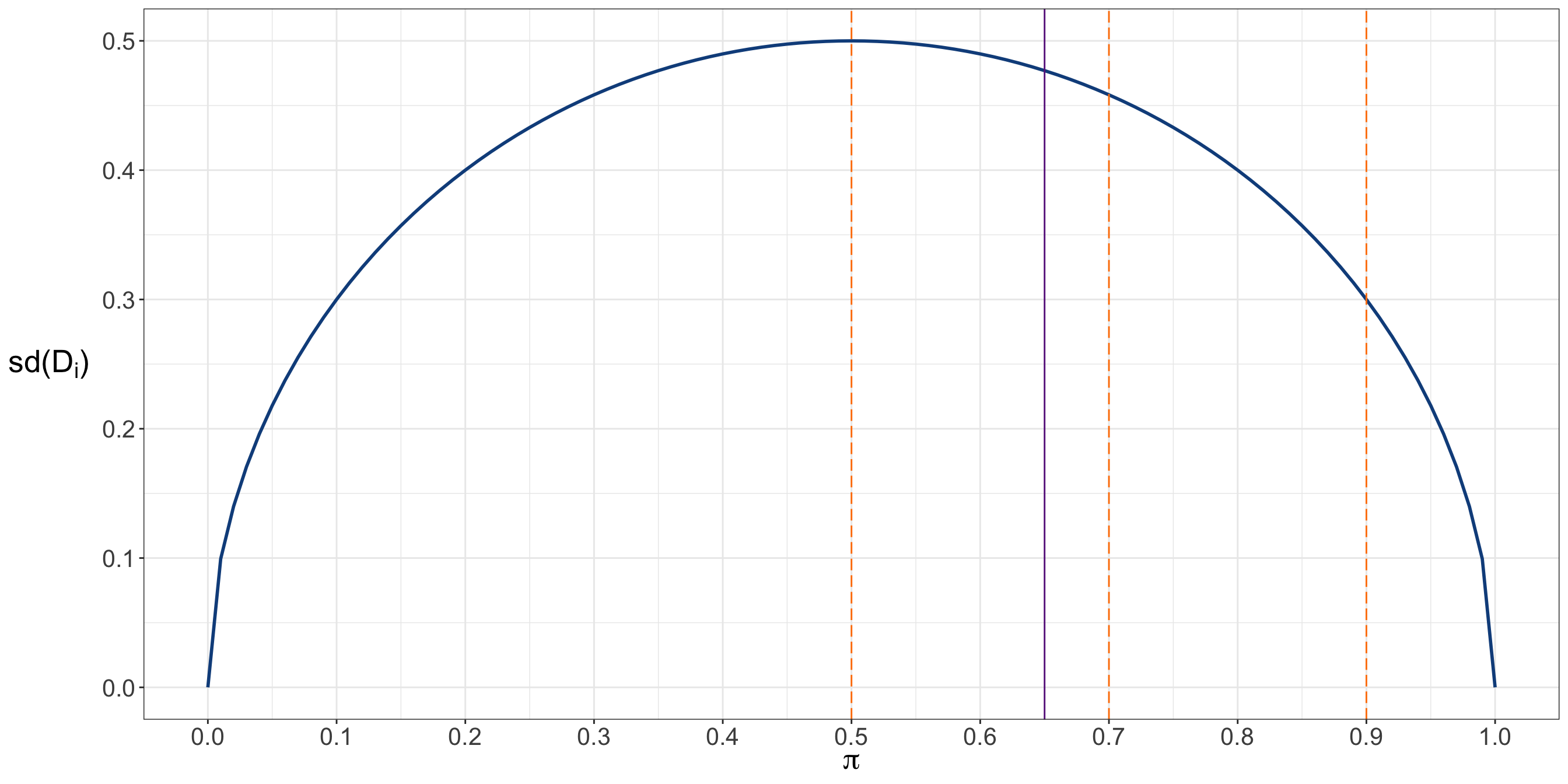

- For the demand query, we are interested in the parameter \(\pi\), which represents the proportion of individuals from the children population who prefer the chocolate flavour over the vanilla flavour. It is crucial to note that a proportion is always bounded between \(0\) and \(1\), similar to how probabilities function. For instance, a proportion of \(0.2\) would mean that \(20\%\) of the children in our population prefer chocolate flavour over vanilla. This definition establishes our demand query parameter as follows:

\[ \pi \in [0, 1]. \]

Heads-up on the use of \(\pi\)!

In this textbook, unless stated otherwise, the letter \(\pi\) will denote a population parameter and not the mathematical constant \(3.141592...\)

- For the time query, we are interested in the parameter \(\beta\), which represents the average waiting time in minutes from one customer to the next one in our population of interest. Unlike the above \(\pi\) parameter, \(\beta\) is only positively unbounded given the definition of our random variable \(T_j\). Therefore, this definition establishes our time query parameter as follows:

\[ \beta \in (0, \infty). \]

Having defined our parameters of interest with proper lowercase Greek letters, it is time to declare our corresponding generative models on a general basis. For the demand query, there will be a single parameter called \(\pi\), where the randomly surveyed child \(D_i\) will follow the model \(m_D\) such that

\[ \begin{gather*} m_D : D_i \sim \mathcal{D}_D(\pi) \\ \qquad \qquad \qquad \qquad \qquad \qquad \qquad \qquad \quad \text{for $i = 1, \dots, n_d.$} \end{gather*} \tag{2.4}\]

Now, for the time query, there will also be a single parameter called \(\beta\). Thus, the randomly recorded waiting time \(T_j\) will follow the model \(m_T\) such that

\[ \begin{gather*} m_T : T_j \sim \mathcal{D}_T(\beta) \\ \qquad \qquad \qquad \qquad \qquad \qquad \qquad \qquad \quad \text{for $j = 1, \dots, n_t.$} \end{gather*} \tag{2.5}\]

Nonetheless, we might wonder the following:

How can we determine the corresponding distributions \(\mathcal{D}_D(\pi)\) and \(\mathcal{D}_T(\beta)\)?

Of course, the above definition of a probability distribution will come in handy to resolve this question. That said, given that we have two types of random variables (discrete and continuous), it is necessary to introduce two specific types of probability functions: the probability mass function (PMF) and the probability density function (PDF).

Definition of probability mass function (PMF)

Let \(Y\) be a discrete random variable whose support is \(\mathcal{Y}\). Moreover, suppose that \(Y\) has a probability distribution such that

\[ P_Y(Y = y) : \mathbb{R} \rightarrow [0, 1] \]

where, for all \(y \notin \mathcal{Y}\), we have

\[ P_Y(Y = y) = 0 \]

and

\[ \sum_{y \in \mathcal{Y}} P_Y(Y = y) = 1. \tag{2.6}\]

Then, \(P_Y(Y = y)\) is considered a PMF.

As we have discussed throughout this ice cream case, let us begin with the demand query. We have already defined the \(i\)th random variable \(D_i\) as discrete and binary. In statistical literature, certain random variables in common random processes can be modelled using what we call parametric families. We refer to these tools as parametric families because they are characterized by a specific set of parameters (in our case, each query has a single-element set, such as \(\pi\) or \(\beta\)).

Moreover, we call them families since each member corresponds to a particular value of our parameter(s). For instance, in our demand query, a chosen member could be where \(\pi = 0.8\) within the respective chosen parametric family to model our surveyed children. Other possible members could correspond to \(\pi = 0.2\), \(\pi = 0.4\) or \(\pi = 0.6\). In fact, the number of members in our chosen parametric family is infinite in this demand query!

Therefore, what parametric family can we choose for our demand query?

The question above introduces a new, valuable resource that is further elaborated upon in Appendix C. This resource outlines the various distributions that will be utilized in this textbook. In reality, the realm of parametric families (specifically, distributions) is quite extensive, and the appendix material serves as only a brief overview of the many parametric families documented in statistical literature.

Tip on data modelling alternatives via different parametric families!

Any data model is simply an abstraction of reality, and different parametric families can provide various alternatives for modelling. In practice, we often need to select a specific family based on our particular inquiries and the conditions of our data. This process requires time and experience to master. Furthermore, it is important to note that different families are often interconnected!

If you wish to explore the world of univariate distribution families—which are used to model a single random variable—Leemis (n.d.) has created a comprehensive relational chart that covers 76 distinct probability distributions: 19 are discrete, and 57 are continuous. However, this chart does not encompass all the possible families that one might encounter in statistical literature (you can check another list at the end of this section).

Referring back to our discussion about Appendix C, it is time to choose the most suitable parametric family for a discrete and binary random variable, such as the \(i\)th random variable \(D_i\). A particular case we can examine is the Bernoulli distribution (also commonly known as a Bernoulli trial). The Bernoulli distribution applies to a discrete random variable that can take one of two values: \(0\), which we refer to as a failure, and \(1\), identified as a success. This aligns with our previous definition from Equation 2.3:

\[ d_i = \begin{cases} 1 \qquad \text{The surveyed child prefers chocolate.}\\ 0 \qquad \text{Otherwise.} \end{cases} \]

The equation above defines the chocolate preference of the \(i\)th surveyed child as a success, while another flavour—specifically vanilla in the context of our limited menu—is categorized as a failure. Thus, we can denote the support as \(d_i \in \{0, 1\}\).

We need to define our population parameter for this demand query in the context of a Bernoulli trial, which is denoted by \(\pi \in [0, 1]\). This represents the proportion of children who prefer the chocolate flavour over the vanilla flavour. In a Bernoulli trial, this parameter refers to the probability of success. Lastly, we can specify our generative model accordingly:

\[ \begin{gather*} m_D : D_i \sim \text{Bern}(\pi) \\ \qquad \qquad \qquad \qquad \qquad \qquad \qquad \qquad \quad \text{for $i = 1, \dots, n_d.$} \end{gather*} \]

We must define the PMF corresponding to the above generative model. The statistical literature assigns the following PMF for a Bernoulli trial \(D_i\):

\[ P_{D_i} \left( D_i = d_i \mid \pi \right) = \pi^{d_i} (1 - \pi)^{1 - d_i} \quad \text{for $d_i \in \{ 0, 1 \}$.} \tag{2.7}\]

A further question arises regarding whether Equation 2.7 satisfies the condition of the total probability of the sample space defined in Equation 2.6 under the definition of a PMF. This condition states that a valid PMF should result in a total probability equal to one when we sum all the probabilities produced by this function over every possible value that the random variable can take.

Hence, we can state Equation 2.7 is a proper probability distribution (i.e., all the standalone probabilities over the support of \(D_i\) add up to one) given that:

Proof. \[ \begin{align*} \sum_{d_i = 0}^1 P_{D_i} \left( D_i = d_i \mid \pi \right) &= \sum_{d_i = 0}^1 \pi^{d_i} (1 - \pi)^{1 - d_i} \\ &= \underbrace{\pi^0}_{1} (1 - \pi) + \pi \underbrace{(1 - \pi)^{0}}_{1} \\ &= (1 - \pi) + \pi \\ &= 1. \qquad \qquad \qquad \qquad \quad \square \end{align*} \tag{2.8}\]

Indeed, this Bernoulli PMF is a proper probability distribution!

The probability distribution, obtained from Equation 2.8, is summarized in Table 2.3. Note that the chocolate preference has a probability equal to \(\pi\), whereas the vanilla preference corresponds to the complement \(1 - \pi\). This probability arrangement completely fulfils the corresponding probability condition of the sample space seen in Equation 2.6.

| \(d_i\) | \(P_{D_i} \left( D_i = d_i \mid \pi \right)\) |
|---|---|
| \(0\) | \(1 - \pi\) |
| \(1\) | \(\pi\) |
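As a quick numerical companion to Equation 2.7 and Equation 2.8, the following Python sketch evaluates the Bernoulli PMF for a few members of the family and checks that the two probabilities add up to one. The function name `bernoulli_pmf` and the chosen values of \(\pi\) are illustrative assumptions.

```python
def bernoulli_pmf(d: int, pi: float) -> float:
    """Bernoulli PMF from Equation 2.7 for d in {0, 1} and pi in [0, 1]."""
    if d not in (0, 1):
        return 0.0  # Zero probability outside the support
    return pi**d * (1 - pi) ** (1 - d)

# Illustrative members of the Bernoulli family
for pi in (0.2, 0.4, 0.6, 0.8):
    p0, p1 = bernoulli_pmf(0, pi), bernoulli_pmf(1, pi)
    print(f"pi = {pi}: P(D = 0) = {p0:.1f}, P(D = 1) = {p1:.1f}, total = {p0 + p1:.1f}")
```

Each printed row corresponds to one member of the parametric family, and each row reproduces the two entries of the table above for that value of \(\pi\).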

To proceed with the time query, we need to analyze the \(j\)th continuous random variable \(T_j\) and subsequently work with a PDF.

Definition of probability density function (PDF)

Let \(Y\) be a continuous random variable whose support is \(\mathcal{Y}\). Furthermore, consider a function \(f_Y(y)\) such that

\[ f_Y(y) : \mathbb{R} \rightarrow \mathbb{R} \]

with

\[ f_Y(y) \geq 0. \tag{2.9}\]

Then, \(f_Y(y)\) is considered a PDF if the probability of \(Y\) taking on a value within the range represented by the subset \(A \subset \mathcal{Y}\) is equal to

\[ P_Y(Y \in A) = \int_A f_Y(y) \mathrm{d}y \]

with

\[ \int_{\mathcal{Y}} f_Y(y) \mathrm{d}y = 1. \tag{2.10}\]

To begin our second analysis, let us examine the nature of the variable \(T_j\) represented as a continuous random variable. This variable is nonnegative, meaning it is positively unbounded, as it models a waiting time. We can interpret \(T_j\) as the waiting time until a specific event of interest occurs, such as when the next customer arrives at the ice cream cart. In statistical literature, this is commonly referred to as a survival time. Hence, we might wonder:

What is the most suitable parametric family to model a survival time?

In this textbook, and in statistical literature more generally, there is more than one alternative for modelling a continuous and nonnegative survival time. Appendix C offers four possible ways:

- Exponential. A random variable with a single parameter that can come in either of the following forms:

  - As a rate \(\lambda \in (0, \infty)\), which generally defines the mean number of events of interest per time interval or space unit.

  - As a scale \(\beta \in (0, \infty)\), which generally defines the mean time until the next event of interest occurs.

- Weibull. A random variable that is a generalization of the Exponential distribution. Note its distributional parameters are the scale continuous parameter \(\beta \in (0, \infty)\) and shape continuous parameter \(\gamma \in (0, \infty)\).

- Gamma. A random variable whose distributional parameters are the shape continuous parameter \(\eta \in (0, \infty)\) and scale continuous parameter \(\theta \in (0, \infty)\).

- Lognormal. A random variable whose logarithmic transformation yields a Normal distribution. Its distributional parameters are the Normal location continuous parameter \(\mu \in (-\infty, \infty)\) and Normal scale continuous parameter \(\sigma^2 \in (0, \infty)\).

In our context, as summarized in the corresponding generative model, it is in our best interest to select a probability distribution characterized by a single parameter. Therefore, the Exponential distribution is the most suitable choice for our current time query, particularly under the scale parametrization, since we aim to estimate the waiting time between two customers.

Heads-up on survival analysis!

Although our ice cream case can be straightforwardly modelled using an Exponential distribution for our time query, by using a single population parameter which indicates a mean waiting time between two customers, it is important to stress that other distributions, such as the Weibull, Gamma, or Lognormal, are also entirely valid options. In fact, utilizing these distributions, that involve more than just a standalone parameter, can enhance the flexibility of our data modelling!

Additionally, there is a specialized statistical field focused on modelling waiting times—specifically, the time until an event of interest occurs. These types of times are formally referred to as survival times, and the associated field is known as survival analysis. It is worth noting that regression analysis can be extended to this area, and Chapter 6 will provide a more in-depth exploration of various parametric models that involve the Exponential, Weibull, and Lognormal distributions.

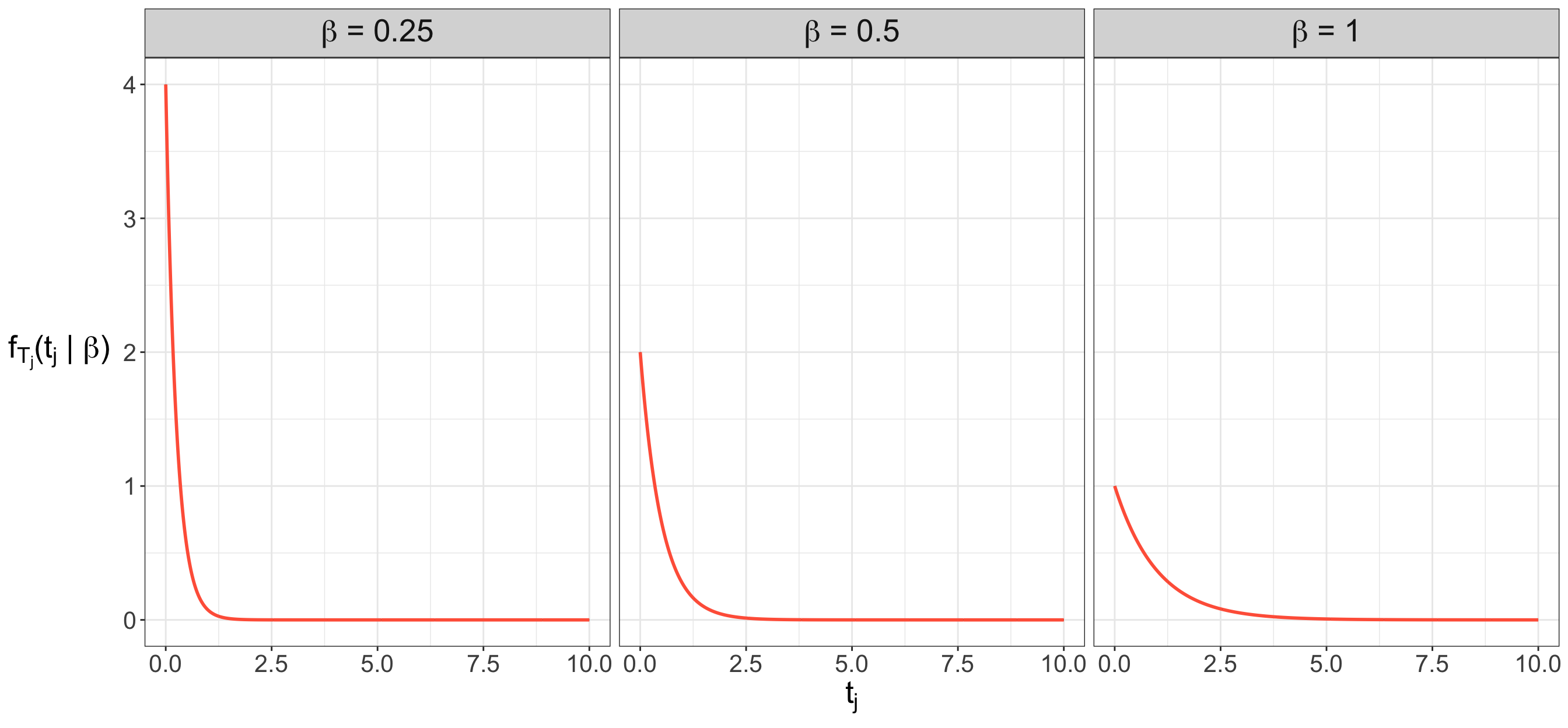

Since we are using an Exponential distribution, we need to establish our population parameter for this time query. As mentioned in Table 2.2, this parameter refers to the average (or mean) waiting time from one customer to the next. This corresponds to a scale parametrization, where the parameter \(\beta \in (0, \infty)\) defines the mean time until the next event of interest occurs (in this case, the next customer). Therefore, we can specify our generative model as follows:

\[ \begin{gather*} m_T : T_j \sim \text{Exponential}(\beta) \\ \qquad \qquad \qquad \qquad \qquad \qquad \qquad \qquad \quad \text{for $j = 1, \dots, n_t.$} \end{gather*} \]

Since \(T_j\) is a continuous random variable, we must define the PDF corresponding to the above generative model. The statistical literature assigns the following PDF for \(T_j\):

\[ f_{T_j} \left(t_j \mid \beta \right) = \frac{1}{\beta} \exp \left( -\frac{t_j}{\beta} \right) \quad \text{for $t_j \in [0, \infty )$.} \tag{2.11}\]
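Equation 2.11 is written under the scale parametrization. As a hedged sketch, the Python snippet below compares it with the rate parametrization, assuming the standard relationship \(\lambda = 1 / \beta\), under which the same density reads \(\lambda \exp(-\lambda t_j)\). The function names and the value \(\beta = 10\) are illustrative choices.

```python
import math

def exp_pdf_scale(t: float, beta: float) -> float:
    """Exponential PDF under the scale parametrization (mean = beta)."""
    return math.exp(-t / beta) / beta if t >= 0 else 0.0

def exp_pdf_rate(t: float, lam: float) -> float:
    """Exponential PDF under the rate parametrization (mean = 1 / lam)."""
    return lam * math.exp(-lam * t) if t >= 0 else 0.0

beta = 10.0       # Hypothetical mean waiting time in minutes
lam = 1.0 / beta  # Equivalent rate: mean number of customers per minute

# Both parametrizations should give the same density at every t
for t in (0.0, 5.0, 10.0, 30.0):
    print(t, exp_pdf_scale(t, beta), exp_pdf_rate(t, lam))
```

The two columns agree up to floating-point rounding, which is why software that expects a rate can still be used with a scale parameter by passing its reciprocal.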

Now, we might wonder whether Equation 2.11 satisfies the condition of the total probability of the sample space defined in Equation 2.10 under the definition of a PDF. This condition states that a valid PDF should result in a total probability equal to one when we integrate this function over all the support of \(T_j\).

Thus, we can state that Equation 2.11 is a proper probability distribution (i.e., Equation 2.11 integrates to one over the support of \(T_j\)) given that:

Proof. \[ \begin{align*} \int_{t_j = 0}^{t_j = \infty} f_{T_j} \left(t_j \mid \beta \right) \mathrm{d}t_j &= \int_{t_j = 0}^{t_j = \infty} \frac{1}{\beta} \exp \left( -\frac{t_j}{\beta} \right) \mathrm{d}t_j \\ &= \frac{1}{\beta} \int_{t_j = 0}^{t_j = \infty} \exp \left( -\frac{t_j}{\beta} \right) \mathrm{d}t_j \\ &= - \frac{\beta}{\beta} \exp \left( -\frac{t_j}{\beta} \right) \Bigg|_{t_j = 0}^{t_j = \infty} \\ &= - \exp \left( -\frac{t_j}{\beta} \right) \Bigg|_{t_j = 0}^{t_j = \infty} \\ &= - \left[ \exp \left( -\infty \right) - \exp \left( 0 \right) \right] \\ &= - \left( 0 - 1 \right) \\ &= 1. \qquad \qquad \qquad \qquad \quad \square \end{align*} \tag{2.12}\]

Indeed, the Exponential PDF, under a scale parametrization, is a proper probability distribution!
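The analytical result in Equation 2.12 can also be checked numerically. The sketch below is only an approximation under stated assumptions: it uses a hypothetical scale of \(\beta = 0.5\) minutes, truncates the integral at \(40 \beta\) (where the remaining tail is negligible), and applies a hand-written trapezoidal rule on a fine grid.

```python
import numpy as np

def exponential_pdf(t, beta):
    """Exponential PDF under the scale parametrization (Equation 2.11)."""
    return np.exp(-t / beta) / beta

beta = 0.5  # Hypothetical scale parameter in minutes

# Fine grid over [0, 40 * beta]; the tail beyond this point is negligible
t = np.linspace(0.0, 40.0 * beta, 200_001)
f = exponential_pdf(t, beta)

# Trapezoidal rule written out explicitly
area = np.sum((f[1:] + f[:-1]) / 2.0 * np.diff(t))
print(round(area, 6))
```

The computed area should be very close to one; changing `beta` to another positive value leads to the same conclusion, in line with the proof.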

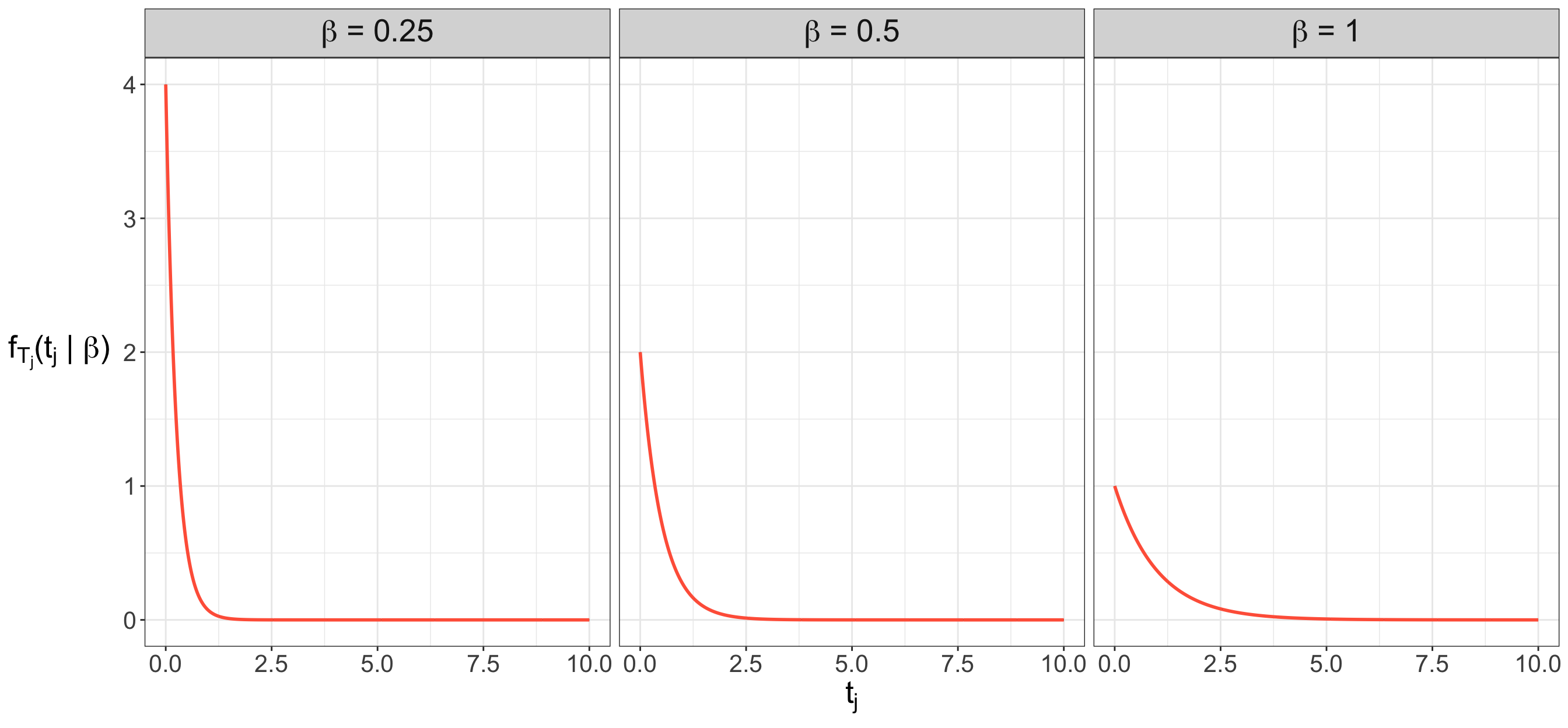

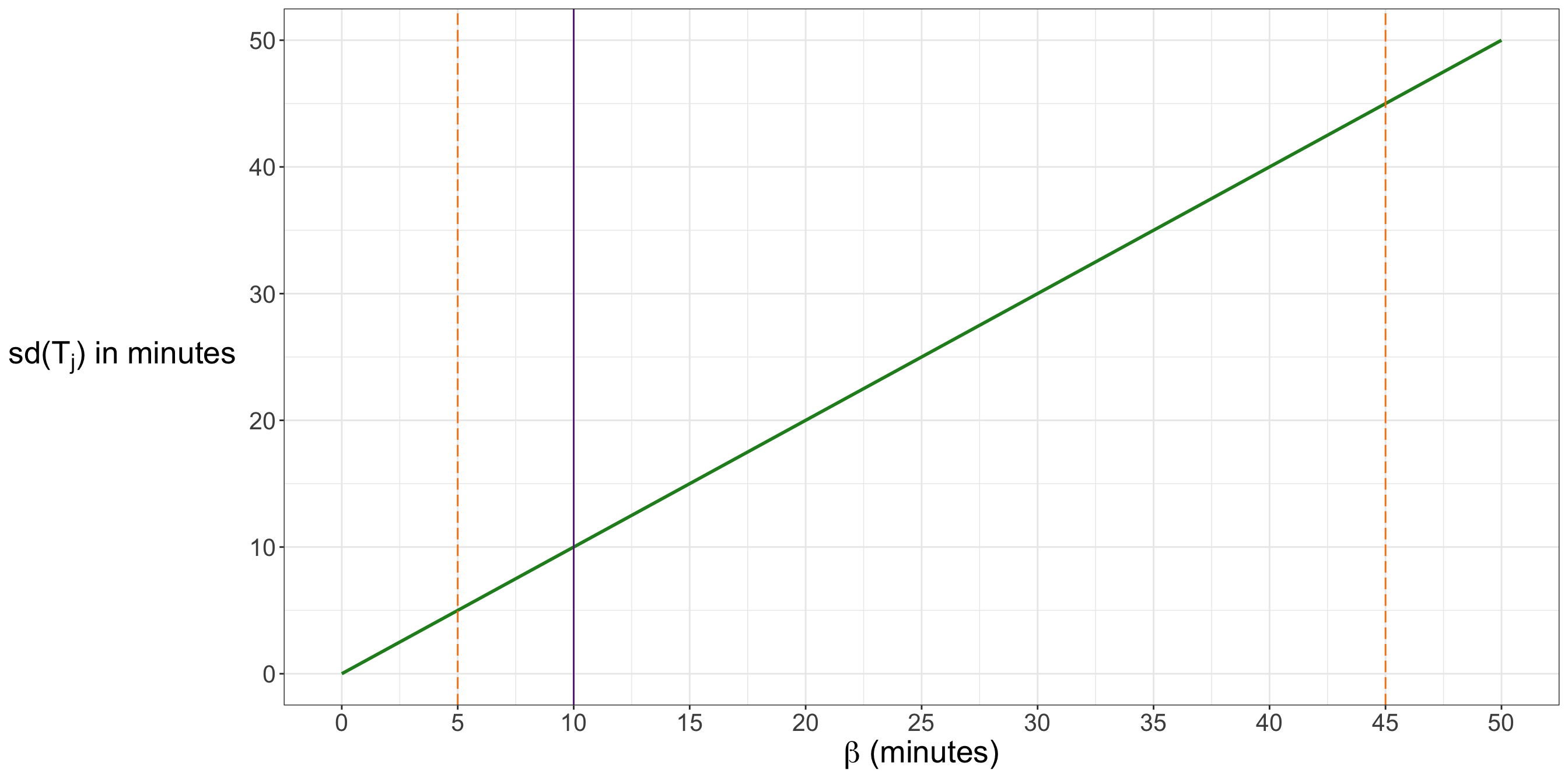

Unlike our demand query, which features a table illustrating the PMF for \(D_i \in \{ 0, 1 \}\) (see Table 2.3), it is not feasible to create a table for the PDF of \(T_j \in [0, \infty)\) because it represents an uncountably infinite set of possible values. However, we can plot the corresponding PDF using three specific members of the Exponential parametric family as examples. Figure 2.2 presents these three example members, with scale parameter values of \(\beta = 0.25, 0.5, 1\) minutes, representing waiting times through their corresponding PDFs. Based on our findings in Equation 2.12, we know that the area under these three density plots equals one, indicating the total probability of the sample space. Additionally, it is important to note that as we increase the scale parameter, larger observed values \(t_j\) become more probable.
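One way to see why larger waiting times become more probable as \(\beta\) grows is to compute the probability of waiting longer than a fixed threshold. Integrating Equation 2.11 from \(t\) to \(\infty\), with the same antiderivative used in Equation 2.12, gives \(P(T_j > t) = \exp(-t / \beta)\). The sketch below evaluates this for the three members shown in Figure 2.2; the one-minute threshold and the function name are illustrative assumptions.

```python
import math

def prob_wait_longer(t: float, beta: float) -> float:
    """P(T > t) for an Exponential waiting time with scale beta."""
    return math.exp(-t / beta)

threshold = 1.0  # Hypothetical threshold in minutes

for beta in (0.25, 0.5, 1.0):
    p = prob_wait_longer(threshold, beta)
    print(f"beta = {beta}: P(T > {threshold}) = {p:.4f}")
```

The three probabilities are roughly \(0.018\), \(0.135\), and \(0.368\), so the chance of waiting more than one minute rises as the scale parameter increases.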

2.1.5 Characterizing Probability Distributions

Before moving on in our distributional journey, let us update Table 2.2 with the specific probability distributions and the mathematical definitions of the parameters to estimate per query.

| | Demand Query | Time Query |
|---|---|---|
| Statement | We would like to know which ice cream flavour is the favourite one (either chocolate or vanilla) and by how much. | We would like to know the average waiting time from one customer to the next one in any given ice cream cart. |
| Population of interest | Children between 4 and 11 years old attending different parks in Vancouver, Victoria, Edmonton, Calgary, Winnipeg, Ottawa, Toronto, and Montréal during Summer weekends. | All our general customer-to-customer waiting times in the different parks of Vancouver, Victoria, Edmonton, Calgary, Winnipeg, Ottawa, Toronto, and Montréal during Summer weekends across the 900 ice cream carts. |
| Parameter | Proportion of individuals from the population of interest who prefer the chocolate flavour versus the vanilla flavour. | Average waiting time in minutes from one customer to the next one. |
| Random variable | \(D_i\) for \(i = 1, \dots, n_d\). | \(T_j\) for \(j = 1, \dots, n_t\). |
| Random variable definition | A favourite ice cream flavour of a randomly surveyed \(i\)th child between 4 and 11 years old attending the parks of Vancouver, Victoria, Edmonton, Calgary, Winnipeg, Ottawa, Toronto, and Montréal during the Summer weekends. | A randomly recorded \(j\)th waiting time in minutes between two customers during a Summer weekend across the 900 ice cream carts found in Vancouver, Victoria, Edmonton, Calgary, Winnipeg, Ottawa, Toronto, and Montréal. |
| Random variable type | Discrete and binary. | Continuous and positively unbounded. |
| Random variable support | \(d_i \in \{ 0, 1\}\) as in Equation 2.3. | \(t_j \in [0, \infty).\) |
| Probability distribution | \(D_i \sim \text{Bern}(\pi)\) | \(T_j \sim \text{Exponential}(\beta)\) |
| Mathematical definition of the parameter | \(\pi\) | \(\beta\) |

Let us proceed, then. We have been exploring the basics of random variables, as well as the importance of generative modelling and probability distributions in addressing different data inquiries. These concepts are fundamental to understanding the population parameter setup before we actually collect data and solve these inquiries to create effective storytelling. Before we delve into those stages, however, we need to identify and explain efficient ways to summarize probability distributions. This will help us make our storytelling compelling for a general audience, as we will discuss further.

To continue with this example, we need to use R and Python code. Thus, we will work with some simulated populations to create the corresponding proofs of concept in this section and the subsequent ones. Let us start with our demand query. We will consider a population size of \(N_d = 2,000,000\) children (whose characteristics are defined in Table 2.4). The code (in either R or Python) below assigns this value as N_d, along with a simulation seed to ensure our results are reproducible. Additionally, for the simulation purposes related to our generative modelling, we will assume that \(65\%\) of these children in this population prefer chocolate over vanilla (i.e., \(\pi = 0.65\)).

Heads-up on real and unknown parameters!

Although we are assigning a value of \(\pi = 0.65\) as our true population parameter in this query, we can never know the exact value in practice unless we conduct a full census. This is why we rely on probabilistic tools, via random sampling and statistical inference, to estimate this \(\pi\) in frequentist statistics.

Let us recall that we are modelling each child as a Bernoulli trial, where a success (denoted as 1) indicates that the child “prefers chocolate.” This also reflects the flavour mapping in the code. Furthermore, instead of using a Bernoulli random number generator, we are utilizing a Binomial random number generator. This is because the Binomial case with parameters \(n = 1\) and \(\pi\) is equivalent to a Bernoulli trial with parameter \(\pi\). Hence, consider the following Binomial case:

\[ Y \sim \text{Bin}(n = 1, \pi), \]

whose PMF is simplified as a Bernoulli given that

\[ \begin{align*} P_Y \left( Y = y \mid n = 1, \pi \right) &= {1 \choose y} \pi^y (1 - \pi)^{1 - y} \\ &= \underbrace{\frac{1!}{y!(1 - y)!}}_{\text{$1$ for $y \in \{ 0, 1 \}$}} \pi^y (1 - \pi)^{1 - y} \\ &= \pi^y (1 - \pi)^{1 - y} \\ & \qquad \qquad \qquad \qquad \qquad \text{for $y \in \{ 0, 1 \}$.} \end{align*} \tag{2.13}\]
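The simplification in Equation 2.13 can be verified numerically with a short Python sketch. The function names below are hypothetical helpers written for this check; the value \(\pi = 0.65\) is the one assumed for the simulated population in this section.

```python
from math import comb

def binomial_pmf(y: int, n: int, pi: float) -> float:
    """Binomial PMF: choose(n, y) * pi^y * (1 - pi)^(n - y)."""
    return comb(n, y) * pi**y * (1 - pi) ** (n - y)

def bernoulli_pmf(y: int, pi: float) -> float:
    """Bernoulli PMF as in Equation 2.7."""
    return pi**y * (1 - pi) ** (1 - y)

pi = 0.65  # Value assumed for the simulated population in this section

# With n = 1, both PMFs should coincide on the support {0, 1}
for y in (0, 1):
    print(y, binomial_pmf(y, 1, pi), bernoulli_pmf(y, pi))
```

Both columns match for \(y = 0\) and \(y = 1\), which is why a Binomial random number generator with a single trial can stand in for a Bernoulli one.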

The final output of this quick simulation, which models a population of \(N_d\) children as Bernoulli trials with a probability of success \(\pi = 0.65\), consists of a data frame containing \(N_d = 2,000,000\) rows, with each row representing a child and their preferred ice cream flavour: either chocolate or vanilla. It is worth noting that the outputs from R and Python differ because each language implements its pseudo-random number generation differently (even though we use the same seed). Note that Python additionally uses the {numpy} and {pandas} libraries.

```r
set.seed(123) # Seed for reproducibility

# Population size
N_d <- 2000000

# Simulate binary outcomes: 1 = chocolate, 0 = vanilla
flavour_bin <- rbinom(N_d, size = 1, prob = 0.65)

# Map binary to flavour names
flavours <- ifelse(flavour_bin == 1, "chocolate", "vanilla")

# Create data frame
children_pop <- data.frame(
  children_ID = 1:N_d,
  fav_flavour = flavours
)

# Showing the first 100 children of the population
head(children_pop, n = 100)
```

```python
# Importing libraries
import numpy as np
import pandas as pd

np.random.seed(123) # Seed for reproducibility

# Population size
N_d = 2000000

# Simulate binary outcomes: 1 = chocolate, 0 = vanilla
flavour_bin = np.random.binomial(n = 1, p = 0.65, size = N_d)

# Map binary to flavour names
flavours = np.where(flavour_bin == 1, "chocolate", "vanilla")

# Create data frame
children_pop = pd.DataFrame({
    "children_ID": np.arange(1, N_d + 1),
    "fav_flavour": flavours
})

# Showing the first 100 children of the population
print(children_pop.head(100))
```

In our time query, we will simulate another population consisting of \(N_t = 500,000\) general customer-to-customer waiting times (as defined in Table 2.4). The code below assigns this population size to the variable N_t. We have already established that this class of data will be modelled using an Exponential distribution under a scale parametrization, where the parameter \(\beta\) defines the mean waiting time between customers. For this query, we will assume that the population of waiting times has a true parameter value of \(\beta = 10\) minutes. Therefore, the code below illustrates this generative modelling mechanism, which produces a data frame containing \(N_t = 500,000\) rows, with each row representing a specific waiting time in minutes.

set.seed(123) # Seed for reproducibility

# Population size

N_t <- 500000

# In R, 'rate' is 1 / scale and rounding to two decimal places

waiting_times <- round(rexp(N_t, rate = 1 / 10), 2)

# Create data frame

waiting_pop <- data.frame(

time_ID = 1:N_t,

waiting_time = waiting_times

)

# Showing the first 100 waiting times of the population

head(waiting_pop, n = 100)

np.random.seed(123) # Seed for reproducibility

# Population size

N_t = 500000

# Simulate waiting times

waiting_times = np.round(np.random.exponential(scale = 10, size = N_t), 2)

# Create DataFrame

waiting_pop = pd.DataFrame({

"time_ID": np.arange(1, N_t + 1),

"waiting_time": waiting_times

})

# Showing the first 100 waiting times of the population

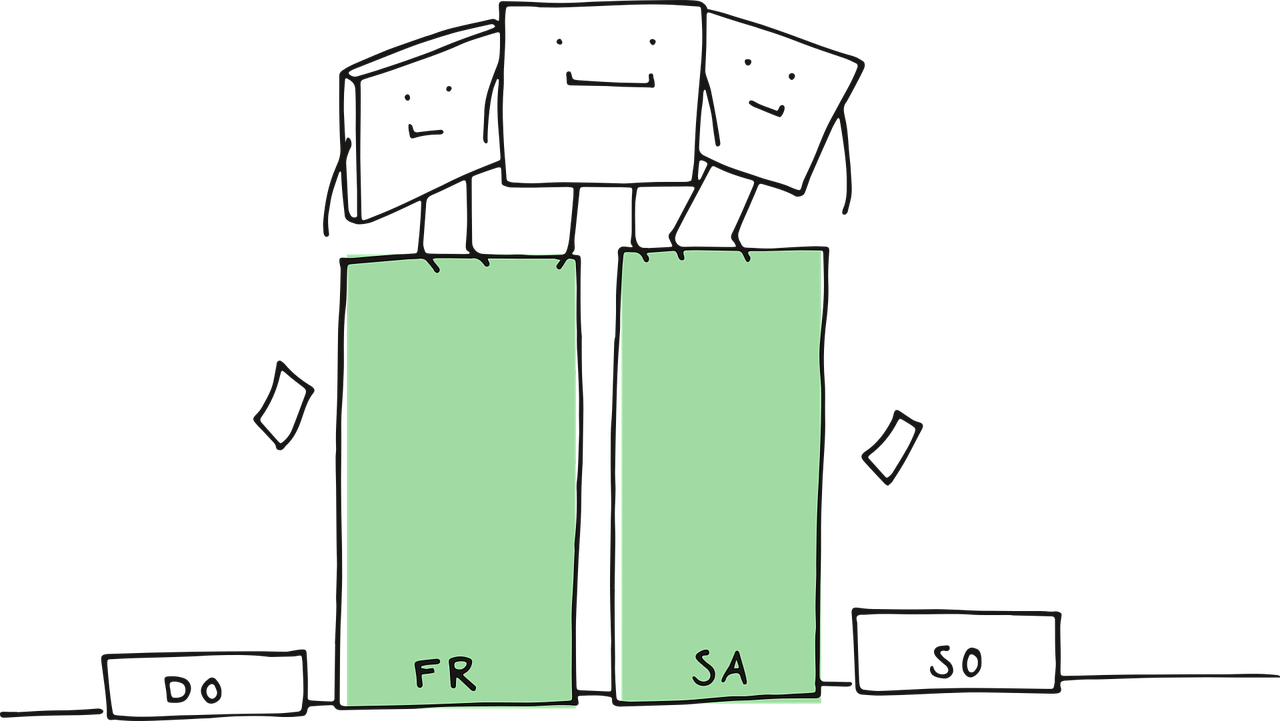

print(waiting_pop.head((100))Imagine that the data collection and analysis for the ice cream case have progressed into the future. You have a follow-up meeting with the eight general managers, one from each Canadian city, to discuss the statements related to both demand and time queries, in relation to our populations of interest. Additionally, you have collected data from a sample of \(n_d = 500\) randomly surveyed children across these eight Canadian cities. Note that the below R and Python sampling functions perform simple random sampling with replacement.

np.random.seed(678) # Seed for reproducibility

# Simple random sample of 500 children with replacement

n_d = 500

children_sample = children_pop.sample(n = n_d, replace = True)

# Showing the first 100 sampled children

print(children_sample.head(100))

Also, you have sampled data on \(n_t = 200\) randomly recorded waiting times between customers across our 900 ice cream carts in the same cities.

np.random.seed(345) # Seed for reproducibility

# Simple random sample of 200 waiting times with replacement

n_t = 200

waiting_sample = waiting_pop.sample(n = n_t, replace = True)

# Showing the first 100 sampled waiting times

print(waiting_sample.head(100))

In terms of the executive meeting with the eight general managers, it would not be an efficient use of time to go individually over these \(n_d = 500\) and \(n_t = 200\) data points along with abstract mathematical concepts such as PMFs or PDFs, as well as probabilistic definitions of random variables and parameters represented by Greek letters. Instead, there should be a more straightforward and simple way to explain how these \(n_d\) and \(n_t\) observed random variables behaved during our data collection process. The key to addressing this complexity lies in understanding measures of central tendency and uncertainty.

Heads-up on population and sample-based measures of central tendency and uncertainty!

When learning about measures of central tendency and uncertainty, it is best to begin with those directly related to our population(s) of interest. These concepts provide valuable insights into how any given population behaves concerning typical values and spread. Subsequently, through sampled data, we can derive estimates for these population-based measures.

In this section, we will focus on the population measures, while Section 2.2 and Section 2.3 will examine the sample-based measures, which are simply the corresponding estimates. Of course, the latter measures will eventually be used in the upcoming executive meeting within the ice cream case. But, for now, let us immerse ourselves a bit into the fundamentals behind the population side of things.

Our first measure to explore is related to typical values found within any given population, specifically a measure of central tendency. We can think of this measure as representing “the most common value” we can expect when observing a certain number of random variables that are drawn from this particular population. Let us start with its formal definition.

Definition of measure of central tendency

Probabilistically, a measure of central tendency is defined as a metric that identifies a central or typical value of a given probability distribution. In other words, a measure of central tendency refers to a central or typical value that a given random variable might take when we observe various realizations of this variable over a long period.

There is more than one measure of central tendency in the statistical literature. However, for the regression models discussed in this book, we will focus on the expected value (see the definition below), which is commonly referred to as the average or mean. You will notice that this measure is closely related to the mainstream average we use in our everyday life (to be discussed later on in this section).

Definition of expected value

Let \(Y\) be a random variable whose support is \(\mathcal{Y}\). In general, the expected value or mean \(\mathbb{E}(Y)\) of this random variable is defined as a weighted average according to its corresponding probability distribution. In other words, this measure of central tendency \(\mathbb{E}(Y)\) aims to find the middle value of this random variable by weighting all its possible values in its support \(\mathcal{Y}\) as dictated by its probability distribution.

Given the above definition, when \(Y\) is a discrete random variable whose PMF is \(P_Y(Y = y)\), then its expected value is mathematically defined as

\[ \mathbb{E}(Y) = \sum_{y \in \mathcal{Y}} y \cdot P_Y(Y = y). \tag{2.14}\]

When \(Y\) is a continuous random variable whose PDF is \(f_Y(y)\), its expected value is mathematically defined as

\[ \mathbb{E}(Y) = \int_{\mathcal{Y}} y \cdot f_Y(y) \mathrm{d}y. \tag{2.15}\]

Tip on further measures of central tendency!

In addition to the expected value, there are other measures that will not be explored in this book such as:

- Mode: For a discrete random variable, the mode is the outcome that corresponds to the highest probability in the PMF. In the case of a continuous random variable, the mode refers to the outcome at which the maximum value occurs in the corresponding PDF.

- Median: This measure primarily relates to continuous random variables. The median is the outcome for which there is a probability of \(0.5\) for observing a value either greater or lesser than it.

Note that the discrete random variable case in Equation 2.14 somewhat resembles the mainstream average, which would instead assign an equal weight to each possible outcome of the random variable. In the above statistical definition, by contrast, we use the corresponding PMF to assign a weight to each possible outcome of the discrete random variable.

Let us exemplify this by using our demand query. Recall that the \(i\)th discrete random variable (as in Table 2.4) \(D_i\) is distributed as follows:

\[ D_i \sim \text{Bern}(\pi), \]

whose PMF is defined as

\[ P_{D_i} \left( D_i = d_i \mid \pi \right) = \pi^{d_i} (1 - \pi)^{1 - d_i} \quad \text{for $d_i \in \{ 0, 1 \}$.} \]

Now, by applying Equation 2.14, note we have the following result (which is in fact the formal proof of the expected value of a Bernoulli trial):

Proof. \[ \begin{align*} \mathbb{E}(D_i) &= \sum_{d_i = 0}^1 d_i P_{D_i} \left( D_i = d_i \mid \pi \right) \\ &= \sum_{d_i = 0}^1 d_i \left[ \pi^{d_i} (1 - \pi)^{1 - d_i} \right] \\ &= \underbrace{(0) \left[ \pi^0 (1 - \pi) \right]}_{0} + (1) \left[ \pi (1 - \pi)^{0} \right] \\ &= 0 + \pi \\ &= \pi. \qquad \qquad \qquad \qquad \qquad \qquad \quad \square \end{align*} \tag{2.16}\]
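As a quick numerical companion to Equation 2.16, the minimal Python sketch below evaluates the weighted sum from Equation 2.14 over the Bernoulli support \(\{0, 1\}\). The helper names `bernoulli_pmf` and `expected_value_discrete` are our own illustrative choices (they do not come from any library), and \(\pi = 0.65\) is the value assumed for the simulated population of children. For contrast, the sketch also computes the equal-weight average of the support.

```python
import numpy as np

def bernoulli_pmf(d, pi):
    """Bernoulli PMF: pi^d * (1 - pi)^(1 - d) for d in {0, 1}."""
    return pi**d * (1 - pi)**(1 - d)

def expected_value_discrete(support, pmf_values):
    """Weighted average of the support, using the PMF values as weights (Equation 2.14)."""
    support = np.asarray(support, dtype=float)
    pmf_values = np.asarray(pmf_values, dtype=float)
    return float(np.sum(support * pmf_values))

pi = 0.65                   # assumed population parameter
support = np.array([0, 1])  # 0 = vanilla, 1 = chocolate
pmf_values = bernoulli_pmf(support, pi)

# PMF-weighted average (expected value) versus equal-weight average of the support
expected_d = expected_value_discrete(support, pmf_values)
equal_weight_average = float(np.mean(support))

print(expected_d)
print(equal_weight_average)
```

The PMF-weighted sum should agree with \(\pi\) up to floating-point error, whereas the equal-weight average of the two outcomes ignores the PMF altogether.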

For the specific case of a Bernoulli-type population, we have just found that its expected value (from Equation 2.16) is equal to the corresponding parameter we aim to estimate, which is \(\pi\). Then, you might wonder:

In practical terms for our ice cream company, how can I explain \(\mathbb{E}(D_i) = \pi\)?

Suppose you sample a sufficiently large number of children (i.e., your sample size \(n_d \rightarrow \infty\)) from a Bernoulli-type population where \(65\%\) of these children prefer chocolate over vanilla (i.e., \(\pi = 0.65\)). Then, you will obtain the proportion of observed random variables \(d_i\) (for \(i = 1, \dots, n_d\)) that correspond to \(1\) (as in Equation 2.3), which is merely a mainstream average. Theoretically speaking, according to our proof in Equation 2.16, this observed proportion should converge to the true population parameter \(\pi = 0.65\).
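The convergence described above can be illustrated with a small simulation. The sketch below is only an illustration under stated assumptions: it draws directly from a Bernoulli distribution with \(\pi = 0.65\) (rather than from the `children_pop` data frame), and the seed, the maximum sample size `n_max`, and the checkpoint sample sizes are arbitrary choices of ours.

```python
import numpy as np

np.random.seed(123)  # Seed for reproducibility (arbitrary choice)

pi = 0.65            # assumed population parameter
n_max = 1_000_000    # largest sample size considered (arbitrary choice)

# Simulate binary outcomes: 1 = chocolate, 0 = vanilla
d = np.random.binomial(n = 1, p = pi, size = n_max)

# Running proportion of ones after each additional sampled child
running_proportion = np.cumsum(d) / np.arange(1, n_max + 1)

# Observed proportion at a few increasing sample sizes
for n_d in [10, 1_000, 100_000, 1_000_000]:
    print(n_d, running_proportion[n_d - 1])
```

For small \(n_d\), the observed proportion can be far from \(0.65\); as \(n_d\) grows, it should settle closer and closer to \(\pi\).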

Let us now explore the expected value for a continuous random variable using our time query. Unlike the previous case depicted in Equation 2.14, the continuous counterpart in Equation 2.15 cannot utilize a summation. Instead, since we have an uncountably infinite set of possible values for a continuous random variable, we must use an integral. This integral involves the corresponding PDF, which weights all possible observed outcomes of the continuous random variable in conjunction with the differential.

Moving along in this query, recall the \(j\)th continuous random variable (as in Table 2.4) \(T_j\) is distributed as follows:

\[ T_j \sim \text{Exponential}(\beta), \]

whose PDF is defined as

\[ f_{T_j} \left(t_j \mid \beta \right) = \frac{1}{\beta} \exp \left( -\frac{t_j}{\beta} \right) \quad \text{for $t_j \in [0, \infty )$.} \]

Now, by applying Equation 2.15, note we have the following result (which is the formal proof of the expected value of an Exponential-distributed random variable under a scale parameterization):

Proof. \[ \begin{align*} \mathbb{E}(T_j) &= \int_{t_j = 0}^{t_j = \infty} t_j f_{T_j} \left(t_j \mid \beta \right) \mathrm{d}t_j \\ &= \int_{t_j = 0}^{t_j = \infty} \frac{t_j}{\beta} \exp \left( -\frac{t_j}{\beta} \right) \mathrm{d}t_j \\ &= \frac{1}{\beta} \int_{t_j = 0}^{t_j = \infty} t_j \exp \left( -\frac{t_j}{\beta} \right) \mathrm{d}t_j. \\ \end{align*} \tag{2.17}\]

Equation 2.17 cannot be solved straightforwardly; we need to use integration by parts as follows:

\[ \begin{align*} u &= t_j & &\Rightarrow & \mathrm{d}u &= \mathrm{d}t_j \\ \mathrm{d}v &= \exp \left( -\frac{t_j}{\beta} \right) \mathrm{d}t_j & &\Rightarrow & v &= -\beta \exp \left( -\frac{t_j}{\beta} \right), \end{align*} \]

which yields

\[ \begin{align*} \mathbb{E}(T_j) &= \frac{1}{\beta} \left[ u v \Bigg|_{t_j = 0}^{t_j = \infty} - \int_{t_j = 0}^{t_j = \infty} v \mathrm{d}u \right] \\ &= \frac{1}{\beta} \Bigg\{ \left[ -\beta t_j \exp \left( -\frac{t_j}{\beta} \right) \right] \Bigg|_{t_j = 0}^{t_j = \infty} + \\ & \qquad \beta \int_{t_j = 0}^{t_j = \infty} \exp \left( -\frac{t_j}{\beta} \right) \mathrm{d}t_j \Bigg\} \\ &= \frac{1}{\beta} \Bigg\{ -\beta \Bigg[ \underbrace{\infty \times \exp(-\infty)}_{0} - \underbrace{0 \times \exp(0)}_{0} \Bigg] - \\ & \qquad \beta^2 \exp \left( -\frac{t_j}{\beta} \right) \Bigg|_{t_j = 0}^{t_j = \infty} \Bigg\} \\ &= \frac{1}{\beta} \left\{ -\beta (0) - \beta^2 \left[ \exp \left( -\infty \right) - \exp \left( 0 \right) \right] \right\} \\ &= \frac{1}{\beta} \left[ 0 - \beta^2 (0 - 1) \right] \\ &= \frac{\beta^2}{\beta} \\ &= \beta. \qquad \qquad \qquad \qquad \qquad \qquad \quad \qquad \qquad \square \end{align*} \tag{2.18}\]
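If you would like to sanity-check the result in Equation 2.18 numerically, the sketch below approximates the integral in Equation 2.15 with a simple midpoint Riemann sum. This is an illustrative check and not part of the proof: the helper `exponential_pdf`, the truncation point `upper` (used in place of \(\infty\)), and the number of bins are assumptions of ours, with \(\beta = 10\) as in the simulated population.

```python
import numpy as np

beta = 10.0  # assumed population parameter (mean waiting time in minutes)

def exponential_pdf(t, beta):
    """Exponential PDF under the scale parameterization."""
    return np.exp(-t / beta) / beta

# Truncate the upper limit where the remaining tail of the integrand is negligible
upper = 50 * beta
n_bins = 1_000_000
width = upper / n_bins

# Midpoints of each bin on [0, upper]
t_mid = (np.arange(n_bins) + 0.5) * width

# Midpoint Riemann sum approximating the integral of t * f(t) over [0, upper]
expected_t = float(np.sum(t_mid * exponential_pdf(t_mid, beta)) * width)

print(expected_t)
```

The approximation should be very close to \(\beta = 10\), in line with the closed-form result obtained via integration by parts.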

Again, for the specific case of an Exponential-type population, we have just found that its expected value (from Equation 2.18) is equal to the corresponding parameter we aim to estimate, which is \(\beta\). This yields the following question:

In practical terms for our ice cream company, how can I explain \(\mathbb{E}(T_j) = \beta\)?

Imagine you sample a sufficiently large number of customer-to-customer waiting times (i.e., your sample size \(n_t \rightarrow \infty\)) from an Exponential-type population where the true average waiting time is \(\beta = 10\) minutes. Then, you will obtain the mainstream average coming from these observed random variables \(t_j\) (for \(j = 1, \dots, n_t\)). Theoretically speaking, according to our proof in Equation 2.18, this observed mainstream average should converge to the true population parameter \(\beta = 10\).
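The same idea can be sketched via simulation for the time query. The code below is a minimal illustration under our own assumptions: it draws waiting times directly from an Exponential distribution with scale \(\beta = 10\) (rather than from the `waiting_pop` data frame), and the seed and the list of sample sizes are arbitrary. The dictionary `sample_means` is a hypothetical name used to store the mainstream average for each sample size.

```python
import numpy as np

np.random.seed(123)  # Seed for reproducibility (arbitrary choice)

beta = 10  # assumed population parameter (mean waiting time in minutes)

# Mainstream average of the simulated waiting times for increasing sample sizes
sample_means = {}
for n_t in [10, 1_000, 100_000, 1_000_000]:
    t = np.random.exponential(scale = beta, size = n_t)
    sample_means[n_t] = float(np.mean(t))
    print(n_t, sample_means[n_t])
```

As \(n_t\) increases, the printed averages should fluctuate less and approach \(\beta = 10\) minutes.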

Heads-up on the so-called mainstream average!

In general, suppose that you obtain \(n\) realizations \(y_k\) (for \(k = 1, \dots, n\)) of a given random variable \(Y\). The observed mainstream average is given by

\[ \bar{y} = \frac{\sum_{k = 1}^n y_k}{n}. \tag{2.19}\]

For the respective classes of observed random variables in the demand and time queries, Equation 2.19 can be applied to obtain estimates of the corresponding population parameters \(\pi\) and \(\beta\). The statistical rationale for using Equation 2.19 will be expanded in Section 2.2.

We have discussed measures of central tendency in detail, more specifically the mean, which represents typical values derived from observing a standalone random variable over a sufficiently large number of trials, denoted as \(n\). Interestingly, there is a useful probabilistic theorem that allows us to calculate expected values for general mathematical functions involving a standalone random variable. This theorem is crucial for obtaining another type of measure related to the spread of a random variable.